
Building Production-Ready Release Pipelines in AWS: A Step-by-Step Guide

This guide walks through building a production release pipeline with AWS-native services (CodePipeline, CodeBuild, and CodeDeploy). It covers IAM roles, infrastructure, build and deployment configuration, and the operational concerns that matter in production: approvals, monitoring, and rollback.

Architecture Overview

Our production pipeline will deploy a web application to EC2 instances behind an Application Load Balancer, implementing blue/green deployment strategies for zero-downtime releases. The pipeline will include multiple environments (development, staging, production) with appropriate gates and approvals.

GitHub → CodePipeline → CodeBuild (Build & Test) → CodeDeploy (Dev) → Manual Approval → CodeDeploy (Staging) → Automated Testing → Manual Approval → CodeDeploy (Production Blue/Green)

Prerequisites

Before we begin, ensure you have:

- AWS CLI configured with appropriate permissions

- A GitHub repository with your application code

- Basic understanding of AWS IAM, EC2, and Load Balancers

- A web application ready for deployment (we’ll use a Node.js example)

Step 1: Setting Up IAM Roles and Policies

CodePipeline Service Role

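First, create an IAM role for CodePipeline and attach the following permissions policy. The wildcard resources keep the example short; in production, scope them to the artifact bucket, build project, and CodeDeploy application used by this pipeline: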

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:GetBucketVersioning",

"s3:GetObject",

"s3:GetObjectVersion",

"s3:PutObject",

"s3:PutObjectAcl"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"codebuild:BatchGetBuilds",

"codebuild:StartBuild"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"codedeploy:CreateDeployment",

"codedeploy:GetApplication",

"codedeploy:GetApplicationRevision",

"codedeploy:GetDeployment",

"codedeploy:GetDeploymentConfig",

"codedeploy:RegisterApplicationRevision"

],

"Resource": "*"

}

]

}

CodeBuild Service Role

Create a role for CodeBuild with permissions to write CloudWatch Logs and to read and write artifacts in S3:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"logs:CreateLogGroup",

"logs:CreateLogStream",

"logs:PutLogEvents"

],

"Resource": "arn:aws:logs:*:*:*"

},

{

"Effect": "Allow",

"Action": [

"s3:GetObject",

"s3:GetObjectVersion",

"s3:PutObject"

],

"Resource": "*"

}

]

}

CodeDeploy Service Role

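Create a service role for CodeDeploy. The policy below is broader than CodeDeploy needs; the AWS managed policy AWSCodeDeployRole is a narrower starting point for EC2 deployments: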

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"autoscaling:*",

"ec2:*",

"elasticloadbalancing:*",

"tag:GetResources"

],

"Resource": "*"

}

]

}
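The three JSON documents above are permissions policies. Each role also needs a trust policy that lets its service assume it. The sketch below is one way to create the roles from the CLI; the helper names and the policy file names are hypothetical, and it assumes each permissions policy has been saved to a local file.

```shell
#!/bin/bash
# Sketch: helpers for creating the service roles used in this guide.
# Function names and policy file names are illustrative assumptions.

# Print a trust (assume-role) policy for one AWS service principal.
trust_policy() {
  local service="$1"   # e.g. codepipeline.amazonaws.com
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "${service}" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

# Create a role and attach an inline permissions policy read from a local file.
create_service_role() {
  local role_name="$1" service="$2" policy_file="$3"
  aws iam create-role \
    --role-name "$role_name" \
    --assume-role-policy-document "$(trust_policy "$service")"
  aws iam put-role-policy \
    --role-name "$role_name" \
    --policy-name "${role_name}Policy" \
    --policy-document "file://${policy_file}"
}

# Example calls (uncomment to run against your account):
# create_service_role CodePipelineServiceRole codepipeline.amazonaws.com codepipeline-policy.json
# create_service_role CodeBuildServiceRole    codebuild.amazonaws.com    codebuild-policy.json
# create_service_role CodeDeployServiceRole   codedeploy.amazonaws.com   codedeploy-policy.json
```

The role names match the ARNs used in later steps, so the later commands work unchanged once the account ID is replaced.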

Step 2: Infrastructure Setup

Create S3 Bucket for Artifacts

aws s3 mb s3://your-company-codepipeline-artifacts-bucket

aws s3api put-bucket-versioning \

--bucket your-company-codepipeline-artifacts-bucket \

--versioning-configuration Status=Enabled

Launch EC2 Instances

Create EC2 instances for each environment with the CodeDeploy agent installed:

#!/bin/bash
# User data script for EC2 instances
yum update -y
yum install -y ruby wget

# Install CodeDeploy agent
cd /home/ec2-user
wget https://aws-codedeploy-us-east-1.s3.us-east-1.amazonaws.com/latest/install
chmod +x ./install
./install auto

# Install Node.js (for our example application)
curl -sL https://rpm.nodesource.com/setup_18.x | bash -
yum install -y nodejs

# Start CodeDeploy agent
service codedeploy-agent start

Create Application Load Balancer

Set up an Application Load Balancer for blue/green deployments:

aws elbv2 create-load-balancer \

--name production-alb \

--subnets subnet-12345678 subnet-87654321 \

--security-groups sg-12345678

aws elbv2 create-target-group \

--name production-blue-tg \

--protocol HTTP \

--port 3000 \

--vpc-id vpc-12345678 \

--health-check-path /health

aws elbv2 create-target-group \

--name production-green-tg \

--protocol HTTP \

--port 3000 \

--vpc-id vpc-12345678 \

--health-check-path /health
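The commands above create the load balancer and target groups but no listener, and an ALB does not route traffic until it has one. A minimal sketch of adding an HTTP listener that forwards to the blue target group follows. The function name is hypothetical, and port 80 without TLS is an assumption made for brevity.

```shell
#!/bin/bash
# Sketch: attach an HTTP listener to the ALB that forwards to a target group.
# create_http_listener is an illustrative helper, not part of the original guide.
create_http_listener() {
  local alb_name="$1" tg_name="$2" port="${3:-80}"
  local alb_arn tg_arn

  # Look up the ARNs by the names used when the resources were created.
  alb_arn="$(aws elbv2 describe-load-balancers --names "$alb_name" \
    --query 'LoadBalancers[0].LoadBalancerArn' --output text)" || return 1
  tg_arn="$(aws elbv2 describe-target-groups --names "$tg_name" \
    --query 'TargetGroups[0].TargetGroupArn' --output text)" || return 1

  aws elbv2 create-listener \
    --load-balancer-arn "$alb_arn" \
    --protocol HTTP --port "$port" \
    --default-actions "Type=forward,TargetGroupArn=${tg_arn}"
}

# Example call (uncomment to run against your account):
# create_http_listener production-alb production-blue-tg
```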

Step 3: CodeBuild Configuration

Create a buildspec.yml file in your repository root:

version: 0.2

phases:
  install:
    runtime-versions:
      nodejs: 18
  pre_build:
    commands:
      - echo Installing dependencies...
      - npm install
  build:
    commands:
      - echo Build started on `date`
      - echo Running tests...
      - npm test
      - echo Building the application...
      - npm run build
  post_build:
    commands:
      - echo Build completed on `date`
      - echo Creating deployment package...

artifacts:
  files:
    - '**/*'
  # node_modules is excluded here and reinstalled on the instance during deployment
  exclude-paths:
    - 'node_modules/**/*'
    - '.git/**/*'
    - '*.md'
  name: myapp-$(date +%Y-%m-%d)

Create CodeBuild Project

aws codebuild create-project \

--name "myapp-build" \

--source type=CODEPIPELINE \

--artifacts type=CODEPIPELINE \

--environment type=LINUX_CONTAINER,image=aws/codebuild/amazonlinux2-x86_64-standard:5.0,computeType=BUILD_GENERAL1_MEDIUM \

--service-role arn:aws:iam::123456789012:role/CodeBuildServiceRole

Step 4: CodeDeploy Applications and Deployment Groups

Create CodeDeploy Application

aws deploy create-application \

--application-name myapp \

--compute-platform Server

Create Deployment Groups

Development Environment:

aws deploy create-deployment-group \

--application-name myapp \

--deployment-group-name development \

--service-role-arn arn:aws:iam::123456789012:role/CodeDeployServiceRole \

--ec2-tag-filters Type=KEY_AND_VALUE,Key=Environment,Value=Development \

--deployment-config-name CodeDeployDefault.AllAtOnce

Staging Environment:

aws deploy create-deployment-group \

--application-name myapp \

--deployment-group-name staging \

--service-role-arn arn:aws:iam::123456789012:role/CodeDeployServiceRole \

--ec2-tag-filters Type=KEY_AND_VALUE,Key=Environment,Value=Staging \

--deployment-config-name CodeDeployDefault.AllAtOnce

Production Environment (Blue/Green):

# COPY_AUTO_SCALING_GROUP needs an existing Auto Scaling group for the blue fleet;
# production-asg is a placeholder name.
aws deploy create-deployment-group \
  --application-name myapp \
  --deployment-group-name production \
  --service-role-arn arn:aws:iam::123456789012:role/CodeDeployServiceRole \
  --deployment-style deploymentType=BLUE_GREEN,deploymentOption=WITH_TRAFFIC_CONTROL \
  --auto-scaling-groups production-asg \
  --blue-green-deployment-configuration '{
    "terminateBlueInstancesOnDeploymentSuccess": {
      "action": "TERMINATE",
      "terminationWaitTimeInMinutes": 5
    },
    "deploymentReadyOption": {
      "actionOnTimeout": "CONTINUE_DEPLOYMENT"
    },
    "greenFleetProvisioningOption": {
      "action": "COPY_AUTO_SCALING_GROUP"
    }
  }' \
  --load-balancer-info '{"targetGroupInfoList": [{"name": "production-blue-tg"}]}' \
  --deployment-config-name CodeDeployDefault.AllAtOnce

Step 5: Application Configuration Files

AppSpec File

Create an appspec.yml file for CodeDeploy:

version: 0.0
os: linux
# Overwrite files left behind by a previous revision
file_exists_behavior: OVERWRITE
files:
  - source: /
    destination: /var/www/myapp
permissions:
  - object: /var/www/myapp
    owner: ec2-user
    group: ec2-user
    mode: 755
hooks:
  ApplicationStop:
    - location: scripts/stop_server.sh
      timeout: 300
      runas: ec2-user
  # AfterInstall runs once the files have been copied, so /var/www/myapp exists
  AfterInstall:
    - location: scripts/install_dependencies.sh
      timeout: 300
      runas: root
  ApplicationStart:
    - location: scripts/start_server.sh
      timeout: 300
      runas: ec2-user
  ValidateService:
    - location: scripts/validate_service.sh
      timeout: 300
      runas: ec2-user

Deployment Scripts

Create a scripts/ directory with the following files:

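scripts/install_dependencies.sh:

#!/bin/bash
# pm2 is used by the start/stop scripts; install it if the AMI does not already provide it
command -v pm2 >/dev/null 2>&1 || npm install -g pm2
cd /var/www/myapp
npm install --production

scripts/start_server.sh:

#!/bin/bash
cd /var/www/myapp
# Ignore the error pm2 reports when nothing is running yet
pm2 stop all || true
pm2 start ecosystem.config.js --env production

scripts/stop_server.sh:

#!/bin/bash
# Ignore the error pm2 reports when nothing is running
pm2 stop all || true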

scripts/validate_service.sh:

#!/bin/bash

# Wait for the application to start

sleep 30

# Check if the application is responding

curl -f http://localhost:3000/health

if [ $? -eq 0 ]; then

echo "Application is running successfully"

exit 0

else

echo "Application failed to start"

exit 1

fi
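The script above waits a fixed 30 seconds before a single check, which is slow when the application starts quickly and fails outright when it starts slowly. A hedged alternative is to poll the health endpoint. The function name, attempt count, and delay below are assumptions, not values from the original script.

```shell
#!/bin/bash
# Sketch: poll the health endpoint until it answers or the attempts run out.
# wait_for_health is an illustrative helper; defaults are arbitrary.
wait_for_health() {
  local url="$1" attempts="${2:-10}" delay="${3:-3}"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    # -f makes curl return non-zero on HTTP errors (4xx/5xx)
    if curl -fsS -o /dev/null "$url"; then
      echo "Application is healthy after ${i} attempt(s)"
      return 0
    fi
    echo "Attempt ${i}/${attempts} failed; retrying in ${delay}s"
    sleep "$delay"
    i=$((i + 1))
  done
  echo "Application failed to become healthy"
  return 1
}

# Example use inside validate_service.sh:
# wait_for_health "http://localhost:3000/health" 10 3 || exit 1
```

Keep attempts multiplied by delay well under the hook's 300-second timeout so the script reports the failure itself rather than being killed by CodeDeploy.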

Step 6: Create the CodePipeline

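Pipeline Configuration

Save the following as pipeline-config.json. Two caveats apply to the Source stage. First, {{resolve:secretsmanager:...}} is a CloudFormation dynamic reference; the AWS CLI passes it through as a literal string, so when creating the pipeline with the CLI you need to substitute the real token value yourself and keep the resulting file out of version control. Second, AWS no longer recommends the GitHub version 1 (OAuth) source action; a connection-based GitHub source (provider CodeStarSourceConnection) is the preferred option for new pipelines.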

{

"pipeline": {

"name": "myapp-production-pipeline",

"roleArn": "arn:aws:iam::123456789012:role/CodePipelineServiceRole",

"artifactStore": {

"type": "S3",

"location": "your-company-codepipeline-artifacts-bucket"

},

"stages": [

{

"name": "Source",

"actions": [

{

"name": "Source",

"actionTypeId": {

"category": "Source",

"owner": "ThirdParty",

"provider": "GitHub",

"version": "1"

},

"configuration": {

"Owner": "your-github-username",

"Repo": "your-repo-name",

"Branch": "main",

"OAuthToken": "{{resolve:secretsmanager:github-oauth-token}}"

},

"outputArtifacts": [

{

"name": "SourceOutput"

}

]

}

]

},

{

"name": "Build",

"actions": [

{

"name": "Build",

"actionTypeId": {

"category": "Build",

"owner": "AWS",

"provider": "CodeBuild",

"version": "1"

},

"configuration": {

"ProjectName": "myapp-build"

},

"inputArtifacts": [

{

"name": "SourceOutput"

}

],

"outputArtifacts": [

{

"name": "BuildOutput"

}

]

}

]

},

{

"name": "DeployToDev",

"actions": [

{

"name": "Deploy",

"actionTypeId": {

"category": "Deploy",

"owner": "AWS",

"provider": "CodeDeploy",

"version": "1"

},

"configuration": {

"ApplicationName": "myapp",

"DeploymentGroupName": "development"

},

"inputArtifacts": [

{

"name": "BuildOutput"

}

]

}

]

},

{

"name": "ApprovalForStaging",

"actions": [

{

"name": "ManualApproval",

"actionTypeId": {

"category": "Approval",

"owner": "AWS",

"provider": "Manual",

"version": "1"

},

"configuration": {

"CustomData": "Please review the development deployment and approve for staging"

}

}

]

},

{

"name": "DeployToStaging",

"actions": [

{

"name": "Deploy",

"actionTypeId": {

"category": "Deploy",

"owner": "AWS",

"provider": "CodeDeploy",

"version": "1"

},

"configuration": {

"ApplicationName": "myapp",

"DeploymentGroupName": "staging"

},

"inputArtifacts": [

{

"name": "BuildOutput"

}

]

}

]

},

{

"name": "StagingTests",

"actions": [

{

"name": "IntegrationTests",

"actionTypeId": {

"category": "Build",

"owner": "AWS",

"provider": "CodeBuild",

"version": "1"

},

"configuration": {

"ProjectName": "myapp-integration-tests"

},

"inputArtifacts": [

{

"name": "SourceOutput"

}

]

}

]

},

{

"name": "ApprovalForProduction",

"actions": [

{

"name": "ManualApproval",

"actionTypeId": {

"category": "Approval",

"owner": "AWS",

"provider": "Manual",

"version": "1"

},

"configuration": {

"CustomData": "Please review staging tests and approve for production deployment"

}

}

]

},

{

"name": "DeployToProduction",

"actions": [

{

"name": "Deploy",

"actionTypeId": {

"category": "Deploy",

"owner": "AWS",

"provider": "CodeDeploy",

"version": "1"

},

"configuration": {

"ApplicationName": "myapp",

"DeploymentGroupName": "production"

},

"inputArtifacts": [

{

"name": "BuildOutput"

}

]

}

]

}

]

}

}

Create the Pipeline

aws codepipeline create-pipeline --cli-input-json file://pipeline-config.json

Step 7: Production Considerations

Monitoring and Alerting

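Set up a CloudWatch alarm for pipeline failures. Confirm in the CodePipeline documentation that the metric name below is published in your account and Region, because an alarm on a metric that never reports data stays in the INSUFFICIENT_DATA state: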

aws cloudwatch put-metric-alarm \

--alarm-name "CodePipeline-Failure" \

--alarm-description "Alert on pipeline failure" \

--metric-name PipelineExecutionFailure \

--namespace AWS/CodePipeline \

--statistic Sum \

--period 300 \

--threshold 1 \

--comparison-operator GreaterThanOrEqualToThreshold \

--dimensions Name=PipelineName,Value=myapp-production-pipeline \

--alarm-actions arn:aws:sns:us-east-1:123456789012:pipeline-alerts
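Pipeline failures can also be caught through EventBridge, which receives a "CodePipeline Pipeline Execution State Change" event for every execution. The sketch below creates a rule for failed executions and routes it to the SNS topic used above. The function names and the rule naming scheme are assumptions.

```shell
#!/bin/bash
# Sketch: notify an SNS topic when a pipeline execution fails, via EventBridge.
# Helper names and the "<pipeline>-failed" rule name are illustrative.

# Build the event pattern that matches failed executions of one pipeline.
pipeline_failure_pattern() {
  local pipeline="$1"
  printf '{"source":["aws.codepipeline"],"detail-type":["CodePipeline Pipeline Execution State Change"],"detail":{"state":["FAILED"],"pipeline":["%s"]}}\n' "$pipeline"
}

# Create the rule and add the SNS topic as its target.
create_failure_rule() {
  local pipeline="$1" topic_arn="$2"
  local rule_name="${pipeline}-failed"
  aws events put-rule \
    --name "$rule_name" \
    --event-pattern "$(pipeline_failure_pattern "$pipeline")"
  aws events put-targets \
    --rule "$rule_name" \
    --targets "Id=sns-alert,Arn=${topic_arn}"
}

# Example call (uncomment to run against your account):
# create_failure_rule myapp-production-pipeline arn:aws:sns:us-east-1:123456789012:pipeline-alerts
```

The SNS topic's access policy must allow events.amazonaws.com to publish, otherwise the rule matches but no notification is delivered.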

Rollback Strategy

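CodeDeploy rolls back automatically on failure only when the deployment group has an auto-rollback configuration. For stopping and rolling back a deployment by hand, the build can also emit a small helper script:

# In buildspec.yml, add rollback script generation
post_build:
  commands:
    - echo "Generating rollback script..."
    - |
      cat > rollback.sh << 'EOF'
      #!/bin/bash
      # Usage: ./rollback.sh <deployment-id>
      aws deploy stop-deployment --deployment-id "$1" --auto-rollback-enabled
      EOF
    - chmod +x rollback.sh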
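For rollback that triggers without anyone running a script, the deployment group itself can be configured to redeploy the last good revision when a deployment fails. A minimal sketch, with a hypothetical function name:

```shell
#!/bin/bash
# Sketch: turn on automatic rollback for an existing deployment group.
# enable_auto_rollback is an illustrative helper, not part of the original guide.
enable_auto_rollback() {
  local app="$1" group="$2"
  aws deploy update-deployment-group \
    --application-name "$app" \
    --current-deployment-group-name "$group" \
    --auto-rollback-configuration "enabled=true,events=DEPLOYMENT_FAILURE"
}

# Example call (uncomment to run against your account):
# enable_auto_rollback myapp production
```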

Security Best Practices

- Use AWS Secrets Manager for sensitive configuration:

aws secretsmanager create-secret \

--name myapp/production/database \

--description "Production database credentials" \

--secret-string '{"username":"admin","password":"securepassword"}'

- Implement least privilege IAM policies

- Enable AWS CloudTrail for audit logging

- Use VPC endpoints for secure communication between services

Performance Optimization

- Use CodeBuild cache to speed up builds:

# In buildspec.yml
cache:
  paths:
    - '/root/.npm/**/*'
    - 'node_modules/**/*'

- Implement parallel deployments for multiple environments

- Use CodeDeploy deployment configurations for optimized rollout strategies

Disaster Recovery

- Cross-region artifact replication:

aws s3api put-bucket-replication \

--bucket your-company-codepipeline-artifacts-bucket \

--replication-configuration file://replication-config.json

- Automated backup of deployment configurations

- Multi-region deployment capabilities

Step 8: Testing the Pipeline

Initial Deployment

- Push code to your GitHub repository

- Monitor the pipeline execution in the AWS Console

- Verify each stage completes successfully

- Test the deployed application in each environment
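The same checks can be run from a terminal instead of the console. The sketch below lists each stage with its latest status and reports any stage that has not succeeded. Both function names are hypothetical.

```shell
#!/bin/bash
# Sketch: inspect pipeline stage status from the CLI.
# Function names are illustrative assumptions.

# Print "stage<TAB>status" for every stage of a pipeline.
pipeline_stage_status() {
  aws codepipeline get-pipeline-state --name "$1" \
    --query 'stageStates[].[stageName,latestExecution.status]' --output text
}

# Print stages whose latest execution is not "Succeeded".
# Returns 0 when every stage succeeded, 1 otherwise.
pipeline_unfinished_stages() {
  pipeline_stage_status "$1" \
    | awk -F'\t' '$2 != "Succeeded" { print $1 ": " $2; bad = 1 } END { exit bad }'
}

# Example calls (uncomment to run against your account):
# pipeline_stage_status myapp-production-pipeline
# pipeline_unfinished_stages myapp-production-pipeline && echo "All stages succeeded"
```

Stages waiting on a manual approval show up as InProgress, so a non-zero return does not always mean a failure.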

Validate Blue/Green Deployment

- Make a code change and push to repository

- Approve the production deployment

- Verify traffic switches to green environment

- Confirm old blue instances are terminated
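One way to confirm the traffic switch is to count healthy targets in the target group before and after the deployment. This is a sketch with a hypothetical function name; it takes the target group ARN as its argument.

```shell
#!/bin/bash
# Sketch: count targets reporting "healthy" in a target group.
# healthy_target_count is an illustrative helper.
healthy_target_count() {
  local tg_arn="$1"
  aws elbv2 describe-target-health --target-group-arn "$tg_arn" \
    --query 'TargetHealthDescriptions[].TargetHealth.State' --output text \
    | tr '\t' '\n' \
    | grep -c '^healthy$'
}

# Example (uncomment to run against your account):
# tg_arn="$(aws elbv2 describe-target-groups --names production-blue-tg \
#   --query 'TargetGroups[0].TargetGroupArn' --output text)"
# healthy_target_count "$tg_arn"
```

During a blue/green deployment the count typically rises while green instances register and returns to the fleet size once the blue instances are deregistered.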

Troubleshooting Common Issues

CodeDeploy Agent Issues

# Check agent status
sudo service codedeploy-agent status

# View agent logs
sudo tail -f /var/log/aws/codedeploy-agent/codedeploy-agent.log

Permission Issues

- Verify IAM roles have correct policies attached

- Check S3 bucket policies allow pipeline access

- Ensure EC2 instances have proper instance profiles

Deployment Failures

- Review CodeDeploy deployment logs in CloudWatch

- Check application logs on target instances

- Verify health check endpoints are responding

Conclusion

This production-ready AWS release pipeline provides a robust foundation for enterprise deployments. Key benefits include:

- Zero-downtime deployments through blue/green strategies

- Multiple environment promotion with manual approvals

- Comprehensive monitoring and alerting

- Automated rollback capabilities

- Security best practices implementation

Remember to regularly review and update your pipeline configuration, monitor performance metrics, and continuously improve your deployment processes based on team feedback and operational requirements.

The pipeline can be extended with additional features such as automated security scanning, performance testing, and integration with other AWS services as your requirements evolve.

How to Deploy Another VPC in AWS with Scalable EC2’s for HA using Terraform

We are going to do this a bit differently from the other post, which just deploys one instance into an existing VPC.

This one is more fun. The structure we use this time lets you scale your EC2 instances very cleanly. If you push changes out through Git repositories, keeping the instance definition in its own module main.tf is much simpler to manage at scale.

File structure:

terraform-project/
├── main.tf             <- Your main configuration file
├── variables.tf        <- Variables file that has the inputs to pass
├── outputs.tf          <- Outputs file
├── security_group.tf   <- File containing security group rules
└── modules/
    └── instance/
        ├── main.tf        <- This file contains your EC2 instances
        └── variables.tf   <- Variables file that defines the inputs the module expects from the root main.tf

Explaining the process:

Main.tf

provider "aws" {
  region = "us-west-2"
}

resource "aws_key_pair" "my-nick-test-key" {
  key_name   = "my-nick-test-key"
  public_key = file("${path.module}/terraform-aws-key.pub")
}

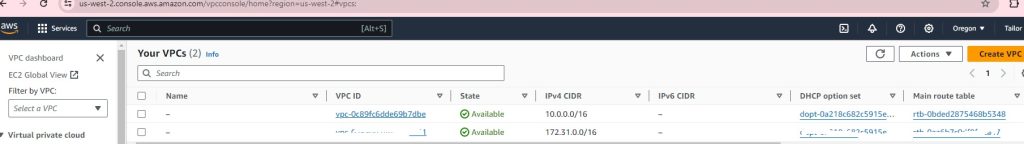

resource "aws_vpc" "vpc2" {
  cidr_block = "10.0.0.0/16"
}

resource "aws_subnet" "newsubnet" {
  vpc_id                  = aws_vpc.vpc2.id
  cidr_block              = "10.0.1.0/24"
  map_public_ip_on_launch = true
}

module "web_server" {
  source            = "./modules/instance" // Must match the modules/ directory in the file structure
  ami_id            = var.ami_id
  instance_type     = var.instance_type
  key_name          = var.key_name_instance
  subnet_id         = aws_subnet.newsubnet.id
  instance_count    = 2 // Specify the number of instances you want
  security_group_id = aws_security_group.newcpanel.id
}

Variables.tf

variable "ami_id" {
  description = "The AMI ID for the instance"
  default     = "ami-0913c47048d853921" // Amazon Linux 2 AMI ID
}

variable "instance_type" {
  description = "The instance type for the instance"
  default     = "t2.micro"
}

variable "key_name_instance" {
  description = "The key pair name for the instance"
  default     = "my-nick-test-key"
}

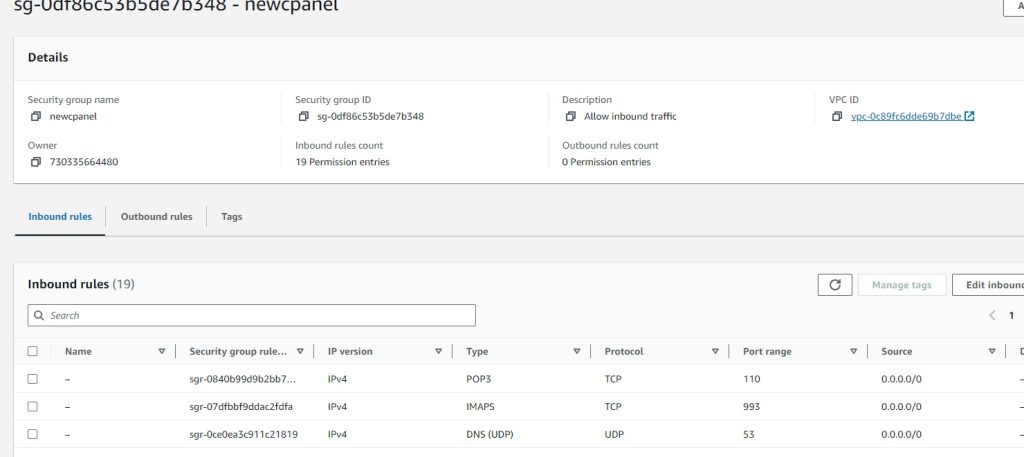

Security_group.tf

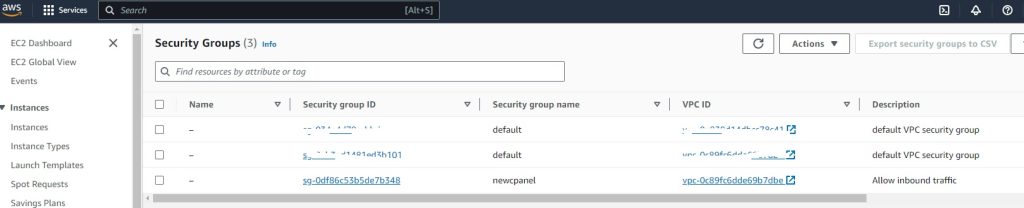

resource "aws_security_group" "newcpanel" {
  name        = "newcpanel"
  description = "Allow inbound traffic"
  vpc_id      = aws_vpc.vpc2.id

  // POP3 TCP 110
  ingress {
    from_port   = 110
    to_port     = 110
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // Custom TCP 20
  ingress {
    from_port   = 20
    to_port     = 20
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // Custom TCP 587
  ingress {
    from_port   = 587
    to_port     = 587
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // DNS (TCP) TCP 53
  ingress {
    from_port   = 53
    to_port     = 53
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // SMTPS TCP 465
  ingress {
    from_port   = 465
    to_port     = 465
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // HTTPS TCP 443
  ingress {
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // DNS (UDP) UDP 53
  ingress {
    from_port   = 53
    to_port     = 53
    protocol    = "udp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // IMAP TCP 143
  ingress {
    from_port   = 143
    to_port     = 143
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // IMAPS TCP 993
  ingress {
    from_port   = 993
    to_port     = 993
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // Custom TCP 21
  ingress {
    from_port   = 21
    to_port     = 21
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // Custom TCP 2086
  ingress {
    from_port   = 2086
    to_port     = 2086
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // Custom TCP 2096
  ingress {
    from_port   = 2096
    to_port     = 2096
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // HTTP TCP 80
  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // SSH TCP 22
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // POP3S TCP 995
  ingress {
    from_port   = 995
    to_port     = 995
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // Custom TCP 2083
  ingress {
    from_port   = 2083
    to_port     = 2083
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // Custom TCP 2087
  ingress {
    from_port   = 2087
    to_port     = 2087
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // Custom TCP 2095
  ingress {
    from_port   = 2095
    to_port     = 2095
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  // Custom TCP 2082
  ingress {
    from_port   = 2082
    to_port     = 2082
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

output "newcpanel_sg_id" {
  value       = aws_security_group.newcpanel.id
  description = "The ID of the security group 'newcpanel'"
}

Outputs.tf

output "public_ips" {
  value       = module.web_server.public_ips
  description = "List of public IP addresses for the instances."
}

Now we want to create the scalable EC2 instances.

We create a modules/instance directory and define the instances as resources inside it.

modules/instance/main.tf

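resource "aws_instance" "Tailor-Server" {
  count                  = var.instance_count // Control the number of instances with a variable
  ami                    = var.ami_id
  instance_type          = var.instance_type
  subnet_id              = var.subnet_id
  key_name               = var.key_name
  vpc_security_group_ids = [var.security_group_id]

  tags = {
    Name = format("Tailor-Server%02d", count.index + 1) // Naming instances with a sequential number
  }

  root_block_device {
    volume_type           = "gp2"
    volume_size           = 30
    delete_on_termination = true
  }
}

// The root outputs.tf reads module.web_server.public_ips, so the module has to export it
output "public_ips" {
  value       = aws_instance.Tailor-Server[*].public_ip
  description = "Public IP addresses of the instances created by this module."
}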

modules/instance/variables.tf

Each variable serves as an input that can be set externally when the module is called, allowing for flexibility and reusability of the module across different environments or scenarios.

Here we declare the list of inputs the module needs in order to work. The actual values are supplied by the module block in the root main.tf.

Cheat sheet:

ami_id: Specifies the Amazon Machine Image (AMI) ID that will be used to launch the EC2 instances. The AMI determines the operating system and software configurations that will be loaded onto the instances when they are created.

instance_type: Determines the type of EC2 instance to launch. This affects the computing resources available to the instance (CPU, memory, etc.).

Type: It is expected to be a string that matches one of AWS’s predefined instance types (e.g., t2.micro, m5.large).

key_name: Specifies the name of the key pair to be used for SSH access to the EC2 instances. This key should already exist in the AWS account.

subnet_id: Identifies the subnet within which the EC2 instances will be launched. The subnet is part of a specific VPC (Virtual Private Cloud).

instance_names: A list of names to be assigned to the instances. The module's main.tf above does not use it (names are generated from count.index), so it is declared with an empty default to keep the module call valid.

security_group_id: Specifies the ID of the security group to attach to the EC2 instances. Security groups act as a virtual firewall for your instances to control inbound and outbound traffic.

instance_count: The number of instances to create. Defaults to 1.

variable "ami_id" {}

variable "instance_type" {}

variable "key_name" {}

variable "subnet_id" {}

variable "instance_names" {
  type        = list(string)
  description = "List of names for the instances to create."
  default     = [] // Not passed by the root module, so it needs a default
}

variable "security_group_id" {
  description = "Security group ID to assign to the instance"
  type        = string
}

variable "instance_count" {
  description = "The number of instances to create"
  type        = number
  default     = 1 // Default to one instance if not specified
}

Time to deploy your code. I didn't bother showing the plan here, just the apply:

my-terraform-vpc$ terraform apply

Do you want to perform these actions?

Terraform will perform the actions described above.

Only ‘yes’ will be accepted to approve.

Enter a value: yes

aws_subnet.newsubnet: Destroying… [id=subnet-016181a8999a58cb4]

aws_subnet.newsubnet: Destruction complete after 1s

aws_subnet.newsubnet: Creating…

aws_subnet.newsubnet: Still creating… [10s elapsed]

aws_subnet.newsubnet: Creation complete after 11s [id=subnet-0a5914443d2944510]

module.web_server.aws_instance.Tailor-Server[1]: Creating…

module.web_server.aws_instance.Tailor-Server[0]: Creating…

module.web_server.aws_instance.Tailor-Server[1]: Still creating… [10s elapsed]

module.web_server.aws_instance.Tailor-Server[0]: Still creating… [10s elapsed]

module.web_server.aws_instance.Tailor-Server[0]: Still creating… [20s elapsed]

module.web_server.aws_instance.Tailor-Server[1]: Still creating… [20s elapsed]

module.web_server.aws_instance.Tailor-Server[1]: Still creating… [30s elapsed]

module.web_server.aws_instance.Tailor-Server[0]: Still creating… [30s elapsed]

module.web_server.aws_instance.Tailor-Server[0]: Still creating… [40s elapsed]

module.web_server.aws_instance.Tailor-Server[1]: Still creating… [40s elapsed]

module.web_server.aws_instance.Tailor-Server[1]: Still creating… [50s elapsed]

module.web_server.aws_instance.Tailor-Server[0]: Still creating… [50s elapsed]

module.web_server.aws_instance.Tailor-Server[0]: Creation complete after 52s [id=i-0d103937dcd1ce080]

module.web_server.aws_instance.Tailor-Server[1]: Still creating… [1m0s elapsed]

module.web_server.aws_instance.Tailor-Server[1]: Still creating… [1m10s elapsed]

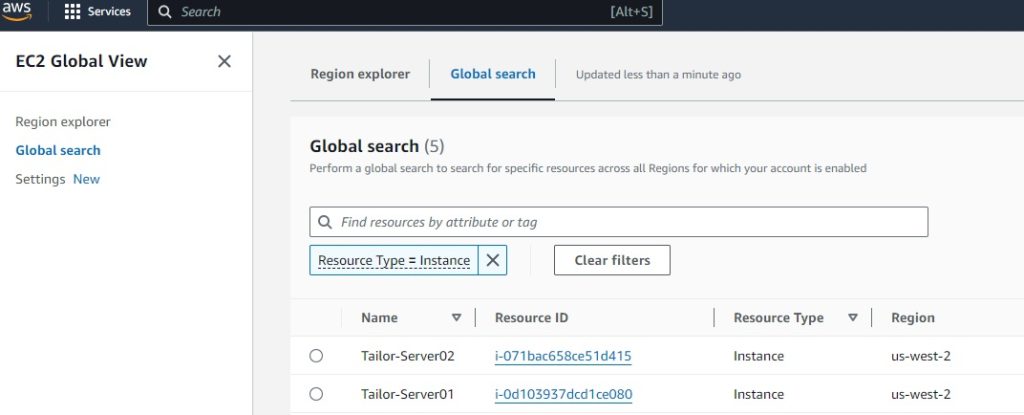

module.web_server.aws_instance.Tailor-Server[1]: Creation complete after 1m12s [id=i-071bac658ce51d415]

Apply complete! Resources: 3 added, 0 changed, 1 destroyed.

Outputs:

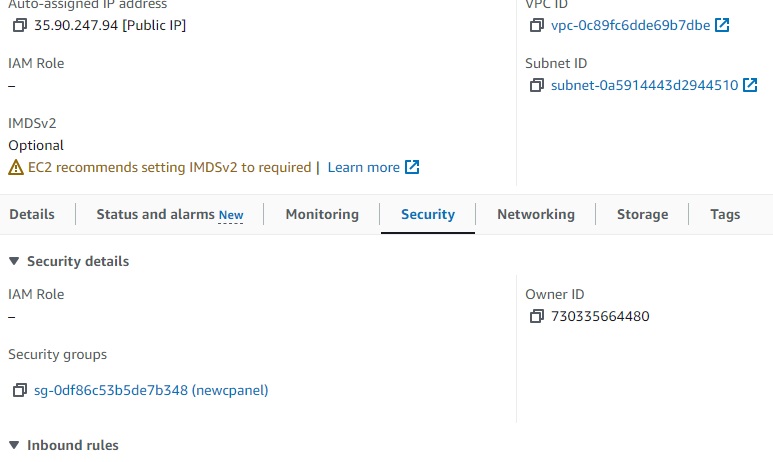

newcpanel_sg_id = “sg-0df86c53b5de7b348”

public_ips = [

“34.219.34.165”,

“35.90.247.94”,

]
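The public_ips output can be consumed by scripts as well as read by eye. The sketch below turns it into one address per line and prints an SSH command for each instance. The function names are hypothetical, and the private key file name terraform-aws-key and the ec2-user login are assumptions based on the key pair and the Amazon Linux 2 AMI used above.

```shell
#!/bin/bash
# Sketch: read the public_ips output and print an SSH command per instance.
# Function names, key file name and login user are illustrative assumptions.

# Print one IPv4 address per line from the JSON form of the output.
instance_public_ips() {
  terraform output -json public_ips | grep -oE '[0-9]+(\.[0-9]+){3}'
}

# Print a ready-to-paste SSH command for every instance.
print_ssh_commands() {
  local key_file="${1:-terraform-aws-key}" user="${2:-ec2-user}" ip
  for ip in $(instance_public_ips); do
    echo "ssh -i ${key_file} ${user}@${ip}"
  done
}

# Example (run from the directory that holds the Terraform state):
# print_ssh_commands
```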

Results:

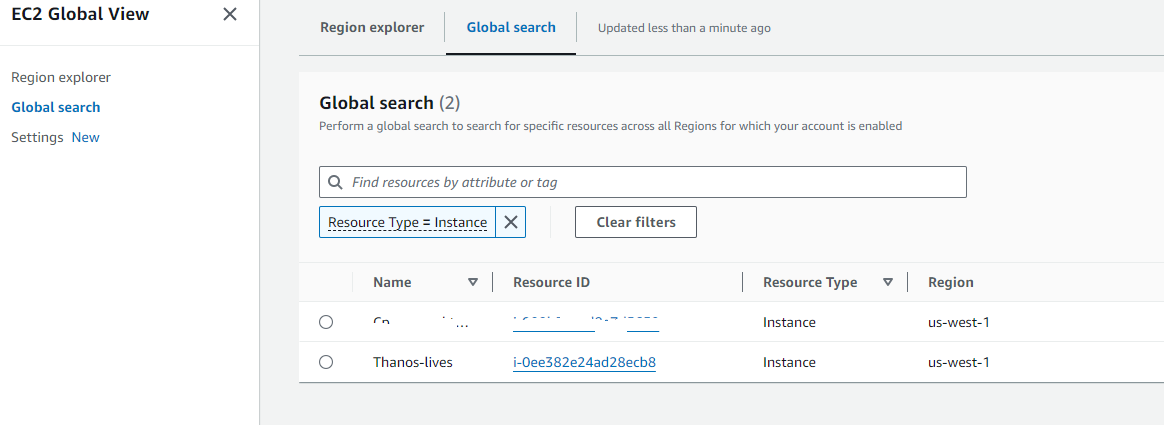

VPC successful:

EC2 successful:

Security-Groups:

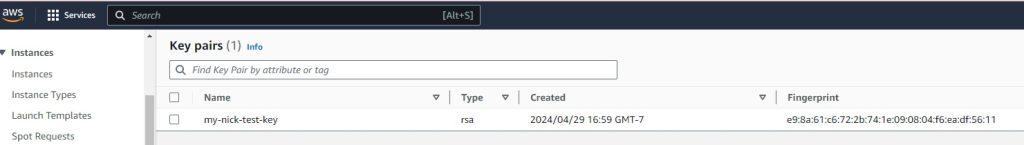

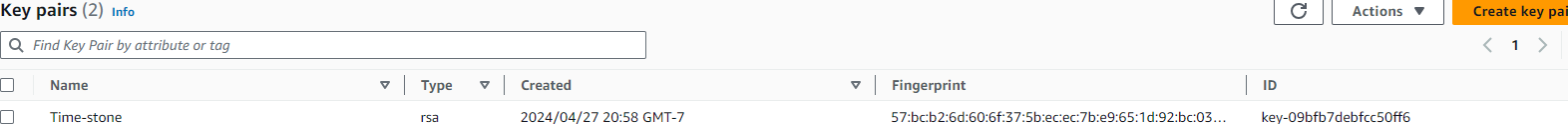

Key Pairs:

Ec2 assigned SG group:

How to deploy an EC2 instance in AWS with Terraform

This post covers:

- How to install Terraform

- How to configure your AWS CLI

- How to set up your file structure

- How to deploy your instance

Prerequisites:

- You must have an AWS account already set up

- You have an existing VPC

- You have existing security groups

Use whichever machine you prefer; I use various distros for fun.

For this post we will use Ubuntu 22.04.

How to install terraform

- Once you are logged into your Linux jump box (or whichever machine you manage from), run:

wget -O- https://apt.releases.hashicorp.com/gpg | sudo gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list

sudo apt update && sudo apt install terraform

ThanosJumpBox:~/myterraform$ terraform -v

Terraform v1.8.2

on linux_amd64

+ provider registry.terraform.io/hashicorp/aws v5.47.

- Next, install the AWS CLI:

sudo apt update

sudo apt install awscli

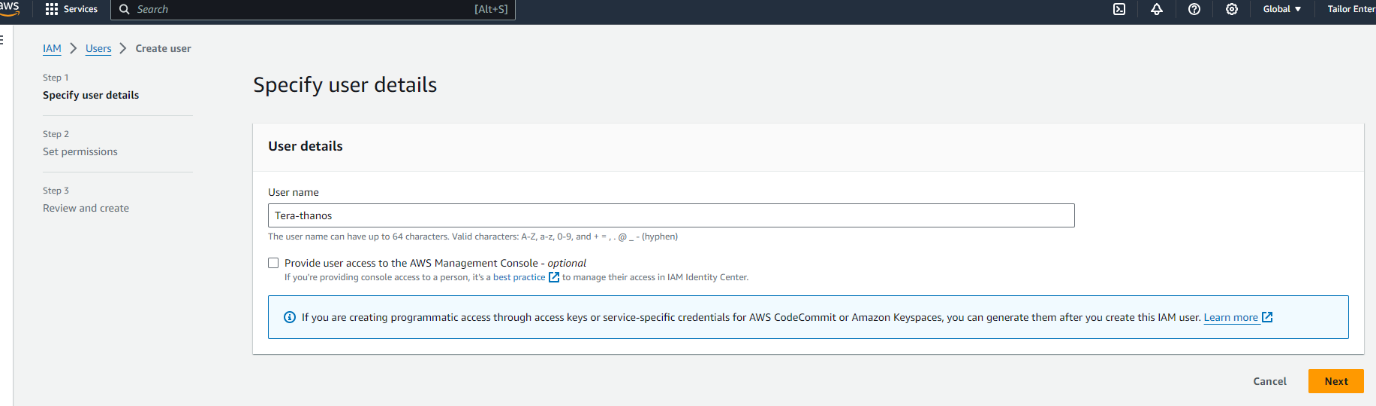

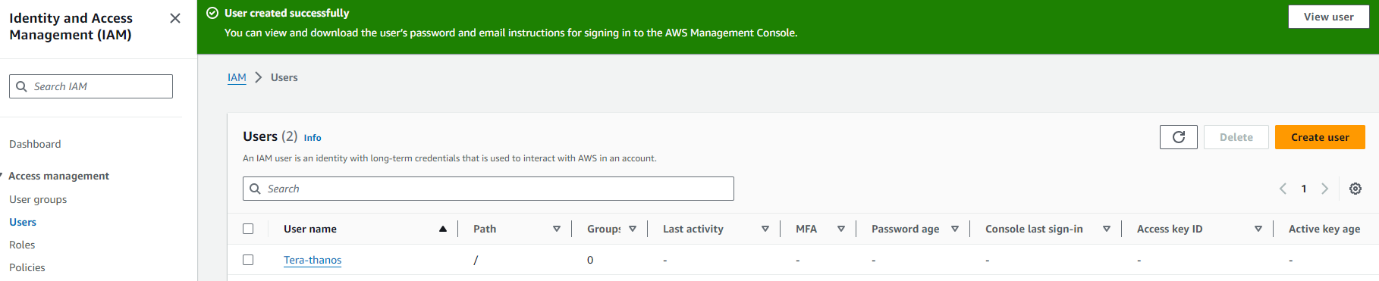

2. Now go into your AWS account and create a user and an AWS CLI access key

- Log into your aws console

- Go to IAM

- Under users create a user called Terrform-thanos

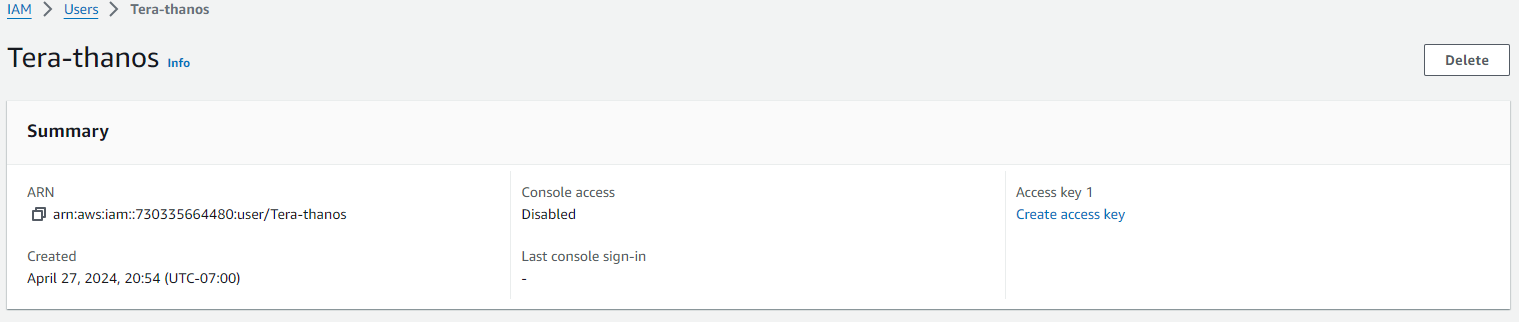

Next, either create a group or add the user to an existing one. To keep things easy for now, we are going to add it to the administrator group.

Next, click on the new user and create the access key.

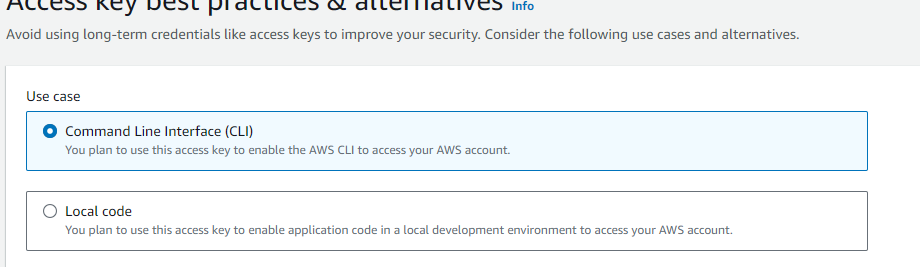

Next select the use case for the key

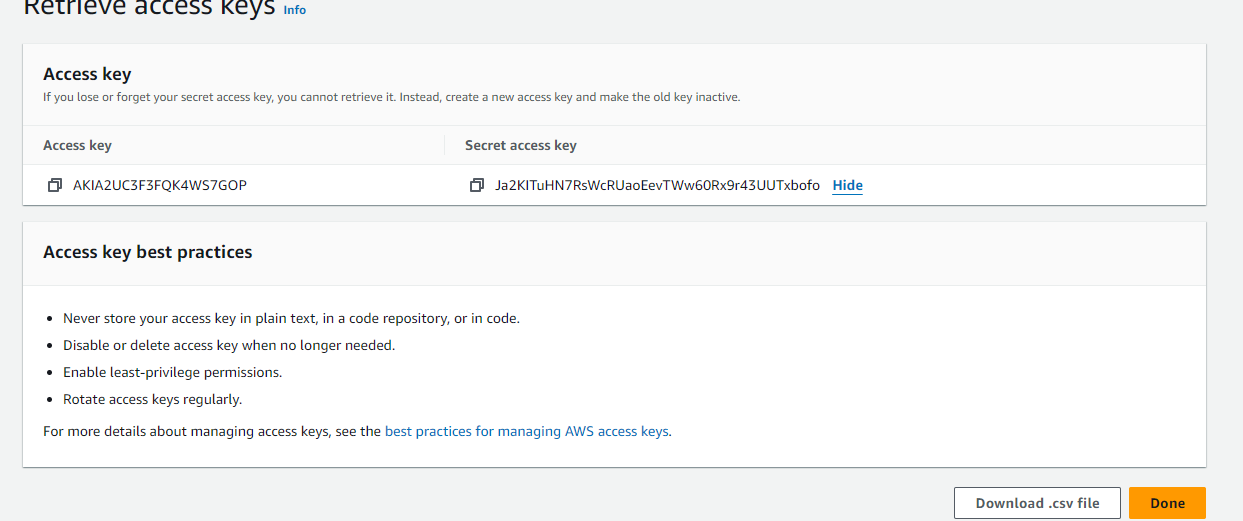

Once you create the ACCESS-KEY you will see the key and secret

Copy these to a text pad and save them somewhere safe.

Next we are going to create the RSA key pair

- Go under EC2 Dashboard

- Then Network & Security

- Then Key Pairs

- Create a new key pair and give it a name

Now configure the AWS CLI with the access key; Terraform picks up the same credentials. Run aws configure and fill in the prompts:

AWS Access Key ID [****************RKFE]:

AWS Secret Access Key [****************aute]:

Default region name [us-west-1]:

Default output format [None]:
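Before running Terraform it is worth confirming that the credentials work. A minimal sketch, with a hypothetical function name, that asks AWS which account the configured key belongs to:

```shell
#!/bin/bash
# Sketch: confirm the configured credentials are valid before using Terraform.
# verify_aws_identity is an illustrative helper.
verify_aws_identity() {
  local account
  account="$(aws sts get-caller-identity --query Account --output text)" || {
    echo "AWS credentials are not configured correctly" >&2
    return 1
  }
  echo "Authenticated against AWS account ${account}"
}

# Example:
# verify_aws_identity && terraform init
```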

A good Terraform file structure to use in a work environment would be:

my-terraform-project/
├── main.tf
├── variables.tf
├── outputs.tf
├── provider.tf
├── modules/
│   ├── vpc/
│   │   ├── main.tf
│   │   ├── variables.tf
│   │   └── outputs.tf
│   └── ec2/
│       ├── main.tf
│       ├── variables.tf
│       └── outputs.tf
├── environments/
│   ├── dev/
│   │   ├── main.tf
│   │   ├── variables.tf
│   │   └── outputs.tf
│   └── prod/
│       ├── main.tf
│       ├── variables.tf
│       └── outputs.tf
├── terraform.tfstate
├── terraform.tfvars
└── .gitignore

That said, for the purposes of this post we will keep it simple. I will be adding separate posts on deploying VPCs, Auto Scaling groups, security groups, etc.

This is also very easy to work with if you use VS Code (VSC) to connect to your Linux machine.

mkdir myterraform
cd myterraform
touch main.tf outputs.tf variables.tf

So we are going to create an Instance as follows

Main.tf

provider "aws" {
  region = var.region
}

resource "aws_instance" "my_instance" {
  ami                    = "ami-0827b6c5b977c020e"    # Use a valid AMI ID for your region
  instance_type          = "t2.micro"                 # Free Tier eligible instance type
  key_name               = ""                         # Ensure this key pair is already created in your AWS account
  subnet_id              = "subnet-0e80683fe32a75513" # Ensure this is a valid subnet in your VPC
  vpc_security_group_ids = ["sg-0db2bfe3f6898d033"]   # Ensure this is a valid security group ID

  tags = {
    Name = "thanos-lives"
  }

  root_block_device {
    volume_type = "gp2" # General Purpose SSD, which is included in the Free Tier
    volume_size = 30    # Maximum size covered by the Free Tier
  }
}

Outputs.tf

output "instance_ip_addr" {
  value       = aws_instance.my_instance.public_ip
  description = "The public IP address of the EC2 instance."
}

output "instance_id" {
  value       = aws_instance.my_instance.id
  description = "The ID of the EC2 instance."
}

output "first_security_group_id" {
  value       = tolist(aws_instance.my_instance.vpc_security_group_ids)[0]
  description = "The first Security Group ID associated with the EC2 instance."
}

Variables.tf

variable "region" {
  description = "The AWS region to create resources in."
  default     = "us-west-1"
}

variable "ami_id" {
  description = "The AMI ID to use for the server."
}

terraform.tfvars

region = "us-west-1"
ami_id = "ami-0827b6c5b977c020e" # Replace with your chosen AMI ID

Deploying your code:

thanosjumpbox:~/my-terraform$ terraform init

Initializing the backend…

Initializing provider plugins…

– Reusing previous version of hashicorp/aws from the dependency lock file

– Using previously-installed hashicorp/aws v5.47.0

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running “terraform plan” to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

thanosjumpbox:~/my-terraform$ terraform plan

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# aws_instance.my_instance will be created

+ resource “aws_instance” “my_instance” {

+ ami = “ami-0827b6c5b977c020e”

+ arn = (known after apply)

+ associate_public_ip_address = (known after apply)

+ availability_zone = (known after apply)

+ cpu_core_count = (known after apply)

+ cpu_threads_per_core = (known after apply)

+ disable_api_stop = (known after apply)

+ disable_api_termination = (known after apply)

+ ebs_optimized = (known after apply)

+ get_password_data = false

+ host_id = (known after apply)

+ host_resource_group_arn = (known after apply)

+ iam_instance_profile = (known after apply)

+ id = (known after apply)

+ instance_initiated_shutdown_behavior = (known after apply)

+ instance_lifecycle = (known after apply)

+ instance_state = (known after apply)

+ instance_type = “t2.micro“

+ ipv6_address_count = (known after apply)

+ ipv6_addresses = (known after apply)

+ key_name = “nicktailor-aws”

+ monitoring = (known after apply)

+ outpost_arn = (known after apply)

+ password_data = (known after apply)

+ placement_group = (known after apply)

+ placement_partition_number = (known after apply)

+ primary_network_interface_id = (known after apply)

+ private_dns = (known after apply)

+ private_ip = (known after apply)

+ public_dns = (known after apply)

+ public_ip = (known after apply)

+ secondary_private_ips = (known after apply)

+ security_groups = (known after apply)

+ source_dest_check = true

+ spot_instance_request_id = (known after apply)

+ subnet_id = “subnet-0e80683fe32a75513”

+ tags = {

+ “Name” = “Thanos-lives”

}

+ tags_all = {

+ “Name” = “Thanos-lives”

}

+ tenancy = (known after apply)

+ user_data = (known after apply)

+ user_data_base64 = (known after apply)

+ user_data_replace_on_change = false

+ vpc_security_group_ids = [

+ “sg-0db2bfe3f6898d033”,

]

+ root_block_device {

+ delete_on_termination = true

+ device_name = (known after apply)

+ encrypted = (known after apply)

+ iops = (known after apply)

+ kms_key_id = (known after apply)

+ tags_all = (known after apply)

+ throughput = (known after apply)

+ volume_id = (known after apply)

+ volume_size = 30

+ volume_type = “gp2”

}

}

Plan: 1 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ first_security_group_id = “sg-0db2bfe3f6898d033”

+ instance_id = (known after apply)

+ instance_ip_addr = (known after apply)

─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Note: You didn’t use the -out option to save this plan, so Terraform can’t guarantee to take exactly these actions if you run “terraform

apply” now.
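The note at the end of the plan output refers to saved plans: writing the plan to a file and applying that file guarantees that what gets applied is exactly what was reviewed. A minimal sketch of that workflow follows. The function name and the default file name tfplan are assumptions.

```shell
#!/bin/bash
# Sketch: plan to a file, review it, then apply exactly that plan.
# plan_and_apply and the default file name "tfplan" are illustrative.
plan_and_apply() {
  local plan_file="${1:-tfplan}" answer
  terraform validate || return 1
  terraform plan -out="$plan_file" || return 1

  # Show the saved plan for review before anything is changed.
  terraform show "$plan_file"

  printf 'Apply this plan? Type yes to continue: '
  read -r answer
  if [ "$answer" != "yes" ]; then
    echo "Aborted"
    return 1
  fi

  # Applying a saved plan does not prompt again.
  terraform apply "$plan_file"
}

# Example (run after terraform init):
# plan_and_apply
```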

thanosjumpbox:~/my-terraform$ terraform apply

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# aws_instance.my_instance will be created

+ resource “aws_instance” “my_instance” {

+ ami = “ami-0827b6c5b977c020e”

+ arn = (known after apply)

+ associate_public_ip_address = (known after apply)

+ availability_zone = (known after apply)

+ cpu_core_count = (known after apply)

+ cpu_threads_per_core = (known after apply)

+ disable_api_stop = (known after apply)

+ disable_api_termination = (known after apply)

+ ebs_optimized = (known after apply)

+ get_password_data = false

+ host_id = (known after apply)

+ host_resource_group_arn = (known after apply)

+ iam_instance_profile = (known after apply)

+ id = (known after apply)

+ instance_initiated_shutdown_behavior = (known after apply)

+ instance_lifecycle = (known after apply)

+ instance_state = (known after apply)

+ instance_type = “t2.micro“

+ ipv6_address_count = (known after apply)

+ ipv6_addresses = (known after apply)

+ key_name = “nicktailor-aws”

+ monitoring = (known after apply)

+ outpost_arn = (known after apply)

+ password_data = (known after apply)

+ placement_group = (known after apply)

+ placement_partition_number = (known after apply)

+ primary_network_interface_id = (known after apply)

+ private_dns = (known after apply)

+ private_ip = (known after apply)

+ public_dns = (known after apply)

+ public_ip = (known after apply)

+ secondary_private_ips = (known after apply)

+ security_groups = (known after apply)

+ source_dest_check = true

+ spot_instance_request_id = (known after apply)

+ subnet_id = “subnet-0e80683fe32a75513”

+ tags = {

+ “Name” = “Thanos-lives”

}

+ tags_all = {

+ “Name” = “Thanos-lives”

}

+ tenancy = (known after apply)

+ user_data = (known after apply)

+ user_data_base64 = (known after apply)

+ user_data_replace_on_change = false

+ vpc_security_group_ids = [

+ “sg-0db2bfe3f6898d033”,

]

+ root_block_device {

+ delete_on_termination = true

+ device_name = (known after apply)

+ encrypted = (known after apply)

+ iops = (known after apply)

+ kms_key_id = (known after apply)

+ tags_all = (known after apply)

+ throughput = (known after apply)

+ volume_id = (known after apply)

+ volume_size = 30

+ volume_type = “gp2”

}

}

Plan: 1 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ first_security_group_id = “sg-0db2bfe3f6898d033”

+ instance_id = (known after apply)

+ instance_ip_addr = (known after apply)

Do you want to perform these actions?

Terraform will perform the actions described above.

Only ‘yes’ will be accepted to approve.

Enter a value: yes

aws_instance.my_instance: Creating…

aws_instance.my_instance: Still creating… [10s elapsed]

aws_instance.my_instance: Still creating… [20s elapsed]

aws_instance.my_instance: Creation complete after 22s [id=i-0ee382e24ad28ecb8]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Outputs:

first_security_group_id = “sg-0db2bfe3f6898d033”

instance_id = “i-0ee382e24ad28ecb8”

instance_ip_addr = “50.18.90.217”

Result: