Category: Ansible

How to check that your route table isn’t missing any routes from your ansible config

REDHAT/CENTOS

Okay, so this is for those of you who use ansible like me and deal with complicated networks, where servers have a route list that’s a mile long and you might need to migrate or copy it to ansible. You want to save yourself some time and be accurate by ensuring the routes are correct and the file isn’t missing any, because missing routes can be problematic and time consuming to troubleshoot after the fact.

Here is something cool you can do.

- On the client server, use the “ip” command with the r flag to list the routes

Example:

It will look something like this.

[root@ansibleserver]# ip r
default via 192.168.1.1 dev enp0s8
default via 10.0.2.2 dev enp0s3 proto dhcp metric 100
default via 192.168.1.1 dev enp0s8 proto dhcp metric 101
10.0.2.0/24 dev enp0s3 proto kernel scope link src 10.0.2.15 metric 100
192.168.1.0/24 dev enp0s8 proto kernel scope link src 192.168.1.12 metric 101
10.132.100.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 1011
10.132.10.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.136.100.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 1011
10.136.10.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.134.100.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 1011
10.133.10.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.127.10.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.122.100.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.134.100.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.181.100.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.181.100.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.247.200.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.172.300.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.162.100.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.161.111.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.161.0.0/16 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.233.130.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.60.140.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101

Now take all the routes that show up on the “mgt” interface and put them in a text file, one after the other in a single column.

- vi ips1

- paste the routes in and save the file

10.132.100.0/24

10.132.10.0/24

10.136.100.0/24

10.136.10.0/24

10.134.100.0/24

10.133.10.0/24

10.127.10.0/24

10.122.100.0/24
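If you would rather not copy the routes by hand, the column can probably be pulled straight out of the ip r output. This is only a sketch: it assumes the interface is called mgt as in the example above and that the destination is always the first field. The sample text stands in for real output.

```shell
# Sample "ip r" output. On a real server you would run:
#   ip r | awk '$2 == "dev" && $3 == "mgt" { print $1 }' > ips1
sample_routes='default via 192.168.1.1 dev enp0s8
10.0.2.0/24 dev enp0s3 proto kernel scope link src 10.0.2.15 metric 100
10.132.100.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101
10.132.10.0/24 dev mgt proto kernel scope link src 10.16.110.1 metric 101'

# Keep only the lines whose device is "mgt" and print the destination (field 1).
mgt_routes=$(printf '%s\n' "$sample_routes" | awk '$2 == "dev" && $3 == "mgt" { print $1 }')

printf '%s\n' "$mgt_routes"
```

Default routes are skipped automatically because their second field is "via" rather than "dev".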

- Now your ansible route section will probably look something like this…

Example of ansible yaml file “ansiblefile”

routes:
  - device: mgt
    gw: 10.16.110.1
    route:
        - 10.132.100.0/24
        - 10.132.10.0/24
        - 10.136.100.0/24
        - 10.136.10.0/24
        - 10.134.100.0/24
        - 10.133.10.0/24
        - 10.127.10.0/24
        - 10.122.100.0/24
        - 10.134.100.0/24
        - 10.181.100.0/24
        - 10.181.100.0/24
        - 10.247.200.0/24
        - 10.172.300.0/24
        - 10.162.100.0/24
        - 10.161.111.0/24
        - 10.161.0.0/16
        - 10.233.130.0/24

- What you want to do now is copy and paste the routes from the file so they line up perfectly, with the correct spacing, in your yaml file.

Note: If they aren’t lined up correctly your playbook will fail.

- You can either copy them into a text editor like TextPad or Notepad++ and use the replace function to add the “- ” prefix (8 spaces before the dash and 1 space between the dash and the ip), or you can use a perl or sed script to do it right from the command line.

# If you want to edit the file in-place
sed -i -e 's/^/prefix/' file

Example:

sed -e 's/^/        - /' ips1 > ips2

- Okay, now you should have a new file called ips2 that looks like the one below, with 8 spaces from the left margin.

        - 10.136.100.0/24
        - 10.136.10.0/24
        - 10.134.100.0/24
        - 10.133.10.0/24
        - 10.127.10.0/24
        - 10.122.100.0/24
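Since the playbook fails when the spacing is off, it may be worth checking the prefix before pasting. The sketch below assumes the 8-space indentation described above; the two routes are just sample data standing in for ips1.

```shell
# Sample route list standing in for the contents of ips1.
routes='10.136.100.0/24
10.136.10.0/24'

# Prefix each line with 8 spaces, a dash, and one space (same idea as the sed step).
prefixed=$(printf '%s\n' "$routes" | sed -e 's/^/        - /')

# Count the lines that do NOT start with exactly 8 spaces followed by "- " and a digit.
# grep -c exits non-zero when the count is 0, so "|| true" keeps the script going.
bad=$(printf '%s\n' "$prefixed" | grep -c -v -E '^ {8}- [0-9]' || true)

echo "lines with wrong indentation: $bad"
```

A count of 0 means every line carries the expected prefix; anything else points at lines to fix by hand.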

- Now cat the ips2 file

- cat ips2

- Then highlight everything inside the file

[highlighted]
        - 10.136.100.0/24
        - 10.136.10.0/24
        - 10.134.100.0/24
        - 10.133.10.0/24
        - 10.127.10.0/24
        - 10.122.100.0/24
[highlighted]

- Open your ansible yaml that contains the route section and, just below “route:”, right against the margin, paste what you highlighted. Everything should line up perfectly. Save the ansible file.

routes:
  - device: mgt
    gw: 10.16.110.1
    route:
[paste highlight]
        - 10.132.100.0/24
        - 10.132.10.0/24
        - 10.136.100.0/24
        - 10.136.10.0/24
        - 10.134.100.0/24
        - 10.133.10.0/24
[paste highlight]

Okay, now we need to check that you didn’t accidentally miss any routes between the route table and your ansible yaml.

- Use the original ips1 file, the one with just the routes from the route table and without the “- ” prefix

- Make sure the ansible yaml file and the ips1 file are inside the same directory to make life easier.

- We can run a little compare script like so

while read -r a b c d e; do if grep -qwF -- "$a" ansiblefile; then :; else echo $a $b $c $d $e; fi; done < ips1

Note:

If there are any routes missing from the ansible file it will spit them out. You can keep running this until the list shows no results, minus any gateway ips of course.

Example:

[root@ansibleserver]# while read -r a b c d e; do if grep -qwF -- "$a" ansiblefile; then :; else echo $a $b $c $d $e; fi; done < ips1

10.168.142.0/24

10.222.100.0/24

10.222.110.0/24
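Another way to get the same answer is to treat both files as sorted lists and let comm do the set difference. This is a sketch under a few assumptions: the file names ips1 and ansiblefile match the ones above, every route in the yaml is written in CIDR form, and the sample contents are made up for the demonstration.

```shell
workdir=$(mktemp -d)

# Sample inputs standing in for the real ips1 and ansible yaml.
cat > "$workdir/ips1" <<'EOF'
10.132.100.0/24
10.132.10.0/24
10.168.142.0/24
EOF
cat > "$workdir/ansiblefile" <<'EOF'
routes:
  - device: mgt
    gw: 10.16.110.1
    route:
        - 10.132.100.0/24
        - 10.132.10.0/24
EOF

# Pull every CIDR out of the yaml, then sort both lists so comm can compare them.
# The gateway has no /prefix, so it is not picked up.
grep -oE '[0-9]+(\.[0-9]+){3}/[0-9]+' "$workdir/ansiblefile" | sort -u > "$workdir/yaml_routes"
sort -u "$workdir/ips1" > "$workdir/table_routes"

# comm -23 prints lines found only in the first file: routes missing from the yaml.
missing=$(comm -23 "$workdir/table_routes" "$workdir/yaml_routes")
echo "$missing"
```

Swapping -23 for -13 would show the opposite case, routes that are in the yaml but not in the route table.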

By Nick Tailor

How to deploy wazuh-agent with Ansible

Note: For windows, ports 5986 and 1515 must be open, and the ansible configure powershell script must already have been set up on the windows machines for ansible to be able to communicate with them and deploy the wazuh-agent.

To deploy the wazuh-agent with ansible to a large group of servers that spans windows, ubuntu, and centos type distros, some tweaks need to be made on the wazuh manager and the ansible server.

This is done on the wazuh-manager server

/var/ossec/etc/ossec.conf: inside this file the following needs to be edited so that registrations record the proper ip of the hosts being registered

<auth>
  <disabled>no</disabled>
  <port>1515</port>
  <use_source_ip>yes</use_source_ip>
  <force_insert>yes</force_insert>
  <force_time>0</force_time>
  <purge>yes</purge>
  <use_password>yes</use_password>
  <limit_maxagents>no</limit_maxagents>
  <ciphers>HIGH:!ADH:!EXP:!MD5:RC4:3DES:!CAMELLIA:@STRENGTH</ciphers>
  <!-- <ssl_agent_ca></ssl_agent_ca> -->
  <ssl_verify_host>no</ssl_verify_host>
  <ssl_manager_cert>/var/ossec/etc/sslmanager.cert</ssl_manager_cert>
  <ssl_manager_key>/var/ossec/etc/sslmanager.key</ssl_manager_key>
  <ssl_auto_negotiate>yes</ssl_auto_negotiate>
</auth>

To enable authd on wazuh-manager

Now on your ansible server

wazuh_managers:
  - address: 10.79.240.160
    port: 1514
    protocol: tcp
    api_port: 55000
    api_proto: 'http'
    api_user: null
wazuh_profile: null
wazuh_auto_restart: 'yes'
wazuh_agent_authd:
  enable: true
  port: 1515

Next section in main.yml

openscap:
  disable: 'no'
  timeout: 1800
  interval: '1d'
  scan_on_start: 'yes'

# We recommend the use of Ansible Vault to protect Wazuh, api, agentless and authd credentials.
authd_pass: 'password'

Test communication to windows machines via ansible run the following from /etc/ansible

How to run the playbook on linux machines, run from /etc/ansible/playbooks/

How to run playbook on windows

Ansible playbook-roles-tasks breakdown

:/etc/ansible/playbooks# cat wazuh-agent.yml   (playbook file)

- hosts: all:!wazuh-manager
  roles:
    - ansible-wazuh-agent   # role that is called
  vars:
    wazuh_managers:
      - address: 192.168.10.10
        port: 1514
        protocol: udp
        api_port: 55000
        api_proto: 'http'
        api_user: ansible
    wazuh_agent_authd:
      enable: true
      port: 1515
      ssl_agent_ca: null
      ssl_auto_negotiate: 'no'

Roles: ansible-wazuh-agent

:/etc/ansible/roles/ansible-wazuh-agent/tasks# cat Linux.yml

---
- import_tasks: "RedHat.yml"
  when: ansible_os_family == "RedHat"

- import_tasks: "Debian.yml"
  when: ansible_os_family == "Debian"

- name: Linux | Install wazuh-agent
  become: yes
  package: name=wazuh-agent state=present
  async: 90
  poll: 15
  tags:
    - init

- name: Linux | Check if client.keys exists
  become: yes
  stat: path=/var/ossec/etc/client.keys
  register: check_keys
  tags:
    - config

I added this task. If the client.keys file already exists, the registration on linux simply gets skipped when the playbook runs, so this task empties the file first. You may want to disable it later; however, when deploying to new machines it is probably best to have it active.

- name: empty client key file
  become: yes
  shell: rm -f /var/ossec/etc/client.keys && touch /var/ossec/etc/client.keys

- name: Linux | Agent registration via authd
  block:

    - name: Retrieving authd Credentials
      include_vars: authd_pass.yml
      tags:
        - config
        - authd

    - name: Copy CA, SSL key and cert for authd
      copy:
        src: "{{ item }}"
        dest: "/var/ossec/etc/{{ item | basename }}"
        mode: 0644
      with_items:
        - "{{ wazuh_agent_authd.ssl_agent_ca }}"
        - "{{ wazuh_agent_authd.ssl_agent_cert }}"
        - "{{ wazuh_agent_authd.ssl_agent_key }}"
      tags:
        - config
        - authd
      when:
        - wazuh_agent_authd.ssl_agent_ca is not none

The section below is the most important one, as this is what registers the machine with wazuh. If this section is skipped, it is usually due to the client.keys file. I have made adjustments from the original git repository, as I found it had some issues.

    - name: Linux | Register agent (via authd)
      shell: >
        /var/ossec/bin/agent-auth
        -m {{ wazuh_managers.0.address }}
        -p {{ wazuh_agent_authd.port }}
        {% if authd_pass is defined %}-P {{ authd_pass }}{% endif %}
        {% if wazuh_agent_authd.ssl_agent_ca is not none %}
        -v "/var/ossec/etc/{{ wazuh_agent_authd.ssl_agent_ca | basename }}"
        -x "/var/ossec/etc/{{ wazuh_agent_authd.ssl_agent_cert | basename }}"
        -k "/var/ossec/etc/{{ wazuh_agent_authd.ssl_agent_key | basename }}"
        {% endif %}
        {% if wazuh_agent_authd.ssl_auto_negotiate == 'yes' %}-a{% endif %}
      become: yes
      register: agent_auth_output
      when:
        - check_keys.stat.size == 0
        - wazuh_managers.0.address is not none
      tags:
        - config
        - authd

    - name: Linux | Verify agent registration
      shell: echo {{ agent_auth_output }} | grep "Valid key created"
      when:
        - check_keys.stat.size == 0
        - wazuh_managers.0.address is not none
      tags:
        - config
        - authd

  when: wazuh_agent_authd.enable == true

- name: Linux | Agent registration via rest-API
  block:

    - name: Retrieving rest-API Credentials
      include_vars: api_pass.yml
      tags:
        - config
        - api

    - name: Linux | Create the agent key via rest-API
      uri:
        url: "{{ wazuh_managers.0.api_proto }}://{{ wazuh_managers.0.address }}:{{ wazuh_managers.0.api_port }}/agents/"
        validate_certs: no
        method: POST
        body: {"name":"{{ inventory_hostname }}"}
        body_format: json
        status_code: 200
        headers:
          Content-Type: "application/json"
        user: "{{ wazuh_managers.0.api_user }}"
        password: "{{ api_pass }}"
      register: newagent_api
      changed_when: newagent_api.json.error == 0
      when:
        - check_keys.stat.size == 0
        - wazuh_managers.0.address is not none
      become: no
      tags:
        - config
        - api

    - name: Linux | Retrieve new agent data via rest-API
      uri:
        url: "{{ wazuh_managers.0.api_proto }}://{{ wazuh_managers.0.address }}:{{ wazuh_managers.0.api_port }}/agents/{{ newagent_api.json.data.id }}"
        validate_certs: no
        method: GET
        return_content: yes
        user: "{{ wazuh_managers.0.api_user }}"
        password: "{{ api_pass }}"
      when:
        - check_keys.stat.size == 0
        - wazuh_managers.0.address is not none
        - newagent_api.json.error == 0
      register: newagentdata_api
      delegate_to: localhost
      become: no
      tags:
        - config
        - api

    - name: Linux | Register agent (via rest-API)
      command: /var/ossec/bin/manage_agents
      environment:
        OSSEC_ACTION: i
        OSSEC_AGENT_NAME: '{{ newagentdata_api.json.data.name }}'
        OSSEC_AGENT_IP: '{{ newagentdata_api.json.data.ip }}'
        OSSEC_AGENT_ID: '{{ newagent_api.json.data.id }}'
        OSSEC_AGENT_KEY: '{{ newagent_api.json.data.key }}'
        OSSEC_ACTION_CONFIRMED: y
      register: manage_agents_output
      when:
        - check_keys.stat.size == 0
        - wazuh_managers.0.address is not none
        - newagent_api.changed
      tags:
        - config
        - api
      notify: restart wazuh-agent

  when: wazuh_agent_authd.enable == false

- name: Linux | Vuls integration deploy (runs in background, can take a while)
  command: /var/ossec/wodles/vuls/deploy_vuls.sh {{ ansible_distribution|lower }} {{ ansible_distribution_major_version|int }}
  args:
    creates: /var/ossec/wodles/vuls/config.toml
  async: 3600
  poll: 0
  when:
    - wazuh_agent_config.vuls.disable != 'yes'
    - ansible_distribution == 'RedHat' or ansible_distribution == 'CentOS' or ansible_distribution == 'Ubuntu' or ansible_distribution == 'Debian' or ansible_distribution == 'Oracle'
  tags:
    - init

- name: Linux | Installing agent configuration (ossec.conf)
  become: yes
  template: src=var-ossec-etc-ossec-agent.conf.j2
            dest=/var/ossec/etc/ossec.conf
            owner=root
            group=ossec
            mode=0644
  notify: restart wazuh-agent
  tags:
    - init
    - config

- name: Linux | Ensure Wazuh Agent service is restarted and enabled
  become: yes
  service:
    name: wazuh-agent
    enabled: yes
    state: restarted

- import_tasks: "RMRedHat.yml"
  when: ansible_os_family == "RedHat"

- import_tasks: "RMDebian.yml"
  when: ansible_os_family == "Debian"

Windows- tasks

Note: This section only works if your ansible is configured to communicate with Windows machines. It requires that port 5986 is open from ansible to windows, and that port 1515 is open from the windows machine to the wazuh-manager.

Problems: When using authd and Kerberos for windows, ensure you have the host name listed in /etc/hosts on the ansible server to help alleviate agent deployment issues. The script does not seem to cope well with more than 5 or 6 clients at a time, at least in my experience.

When it failed, I either had to rejoin the windows machine to the domain or remove the client.keys file. I have updated this task file to remove the client.keys file before it checks whether the file exists. You do need to play with it a bit sometimes. I have also added a section that adds the wazuh-agent as a service and restarts it upon deployment, as I found it sometimes skipped this entirely.
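To catch the /etc/hosts problem before a deployment run, something along these lines could be used. It is a sketch only: the host names winserver1 and winserver2 are hypothetical, and the sample hosts file stands in for /etc/hosts on the ansible server.

```shell
workdir=$(mktemp -d)

# Sample hosts file; on the ansible server you would point hosts_file at /etc/hosts.
hosts_file="$workdir/hosts"
printf '%s\n' '127.0.0.1 localhost' '10.0.0.5 winserver1.example.com winserver1' > "$hosts_file"

# Hypothetical windows host names to look for.
wanted="winserver1 winserver2"

not_listed=""
for h in $wanted; do
    # Look for the name as a whole field after the ip column, ignoring comment lines.
    if ! awk -v name="$h" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == name) found = 1 } END { exit !found }' "$hosts_file"; then
        not_listed="$not_listed $h"
    fi
done

echo "not in hosts file:$not_listed"
```

Any name printed here would need an entry added before running the playbook against that host.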

:/etc/ansible/roles/ansible-wazuh-agent/tasks# cat Windows.yml

---
- name: Windows | Get current installed version
  win_shell: "{{ wazuh_winagent_config.install_dir }}ossec-agent.exe -h"
  args:
    removes: "{{ wazuh_winagent_config.install_dir }}ossec-agent.exe"
  register: agent_version
  failed_when: False
  changed_when: False

- name: Windows | Check Wazuh agent version installed
  set_fact: correct_version=true
  when:
    - agent_version.stdout is defined
    - wazuh_winagent_config.version in agent_version.stdout

- name: Windows | Downloading windows Wazuh agent installer
  win_get_url:
    dest: C:\wazuh-agent-installer.msi
    url: "{{ wazuh_winagent_config.repo }}wazuh-agent-{{ wazuh_winagent_config.version }}-{{ wazuh_winagent_config.revision }}.msi"
  when:
    - correct_version is not defined

- name: Windows | Verify the downloaded Wazuh agent installer
  win_stat:
    path: C:\wazuh-agent-installer.msi
    get_checksum: yes
    checksum_algorithm: md5
  register: installer_md5
  when:
    - correct_version is not defined
  failed_when:
    - installer_md5.stat.checksum != wazuh_winagent_config.md5

- name: Windows | Install Wazuh agent
  win_package:
    path: C:\wazuh-agent-installer.msi
    arguments: APPLICATIONFOLDER={{ wazuh_winagent_config.install_dir }}
  when:
    - correct_version is not defined

I added this section. If the client.keys file was present, registrations would be skipped.

- name: Remove a file, if present
  win_file:
    path: C:\wazuh-agent\client.keys
    state: absent

This section was added for troubleshooting purposes

#- name: Touch a file (creates if not present, updates modification time if present)
#  win_file:
#    path: C:\wazuh-agent\client.keys
#    state: touch

- name: Windows | Check if client.keys exists
  win_stat: path="{{ wazuh_winagent_config.install_dir }}client.keys"
  register: check_windows_key
  notify: restart wazuh-agent windows
  tags:
    - config

- name: Retrieving authd Credentials
  include_vars: authd_pass.yml
  tags:
    - config

- name: Windows | Register agent
  win_shell: >
    {{ wazuh_winagent_config.install_dir }}agent-auth.exe
    -m {{ wazuh_managers.0.address }}
    -p {{ wazuh_agent_authd.port }}
    {% if authd_pass is defined %}-P {{ authd_pass }}{% endif %}
  args:
    chdir: "{{ wazuh_winagent_config.install_dir }}"
  register: agent_auth_output
  notify: restart wazuh-agent windows
  when:
    - wazuh_agent_authd.enable == true
    - check_windows_key.stat.exists == false
    - wazuh_managers.0.address is not none
  tags:
    - config

- name: Windows | Installing agent configuration (ossec.conf)
  win_template:
    src: var-ossec-etc-ossec-agent.conf.j2
    dest: "{{ wazuh_winagent_config.install_dir }}ossec.conf"
  notify: restart wazuh-agent windows
  tags:
    - config

- name: Windows | Delete downloaded Wazuh agent installer file
  win_file:
    path: C:\wazuh-agent-installer.msi
    state: absent

This section was added because the service sometimes was not created and the agent was not restarted upon deployment, which resulted in a non-active client in kibana.

- name: Create a new service
  win_service:
    name: wazuh-agent
    path: C:\wazuh-agent\ossec-agent.exe

- name: Windows | Wazuh-agent Restart
  win_service:
    name: wazuh-agent
    state: restarted

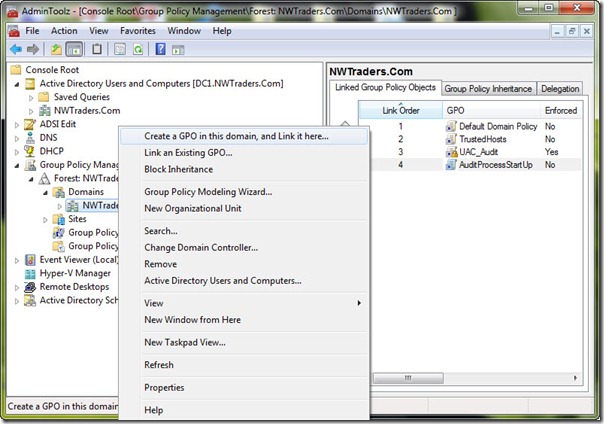

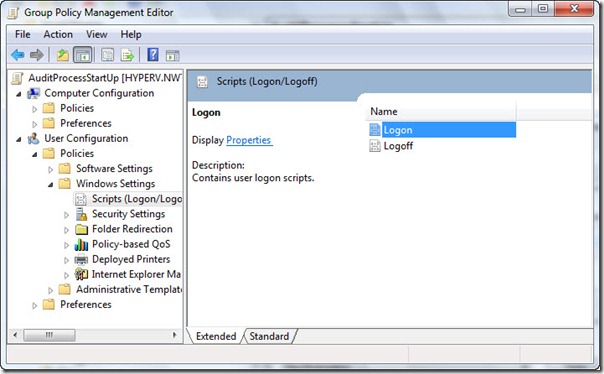

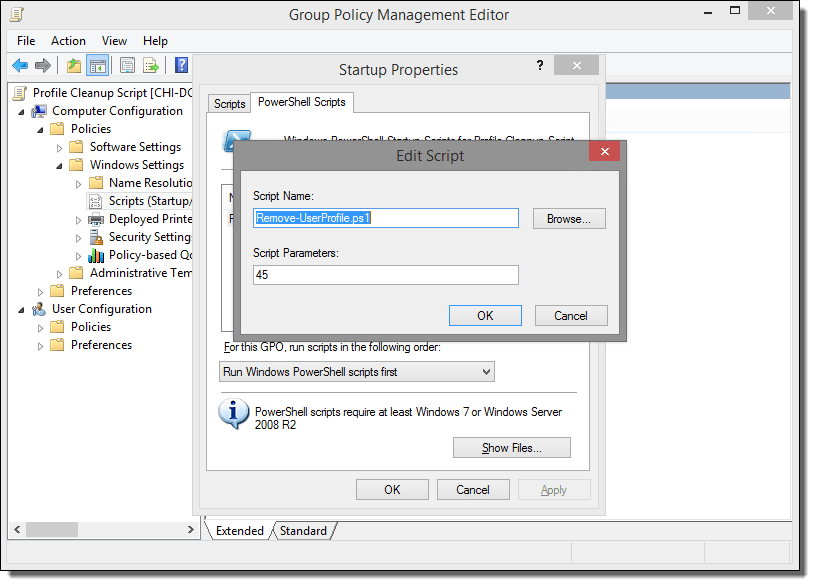

How to deploy ansibleconfigure powershell script on windows

Okay, fun stuff. I tried this a number of ways, which I will describe in this blog post.

If your windows server is joined to the domain, you have a machine that can reach all the virtual machines, WinRM is configured, and you have powershell 3.0 or higher set up, then you could try the following powershell for loop from the SYSVOL share.

Sample powershell For Loop

powershell loop deploy – ask credentials

$serverfiles = Import-Csv 'd:\scripts\hosts.csv'
$cred = Get-Credential
foreach ($server in $serverfiles) {
    Write-Output $server.names
    Invoke-Command -ComputerName $server.names -FilePath d:\scripts\ansibleconfigure.ps1 -Credential $cred
}

Note: This method failed for me because WinRM was not set up, along with other restrictions on the hosts. The other issue was that I’m not exactly at an intermediate level with powershell, so I had to muddle around a lot.

What you want to do here is copy the configure script to SYSVOL so all the joined machines can reach the script.

In the search bar type: (replace domain to match)

script name

How to configure Ansible to manage Windows Hosts on Ubuntu 16.04

Note: This section assumes you already have ansible installed and working, active directory set up, and a test windows host in communication with AD. AD is not strictly needed, but it is good practice to have it all set up and talking to each other for learning.

Setup

Ansible does not come with the ability to manage windows out of the box. It is easier to set up on centos, as the packages are better maintained on Redhat distros. However, if you want to set it up on Ubuntu, here is what you need to do.

Next Setup your /etc/krb5.conf

[logging]
 default = FILE:/var/log/krb5libs.log
 kdc = FILE:/var/log/krb5kdc.log
 admin_server = FILE:/var/log/kadmind.log

[libdefaults]
 default_realm = HOME.NICKTAILOR.COM
 dns_lookup_realm = false
 dns_lookup_kdc = false
 ticket_lifetime = 24h
 renew_lifetime = 7d
 forwardable = true

[realms]
 HOME.NICKTAILOR.COM = {
  kdc = HOME.NICKTAILOR.COM
  admin_server = HOME.NICKTAILOR.COM
 }

[domain_realm]
 .home.nicktailor.com = HOME.NICKTAILOR.COM
 home.nicktailor.com = HOME.NICKTAILOR.COM

Test Kerberos

Run the following commands to test Kerberos:

kinit administrator@HOME.NICKTAILOR.COM

Note: Make sure you type this exactly, as it is case sensitive, or your authentication will fail. Also the user has to have domain admin privileges.

You will be prompted for the administrator password.

klist

You should see a Kerberos KEYRING record.

[root@localhost win_playbooks]# klist
Ticket cache: FILE:/tmp/krb5cc_0
Default principal: administrator@HOME.NICKTAILOR.COM

Valid starting       Expires              Service principal
05/23/2018 14:20:50  05/24/2018 00:20:50  krbtgt/HOME.NICKTAILOR.COM@HOME.NICKTAILOR.COM
        renew until 05/30/2018 14:20:40

Configure Ansible

Ansible is complex and is sensitive to the environment. Troubleshooting an environment which has never initially worked is complex and confusing. We are going to configure Ansible with the least complex possible configuration. Once you have a working environment, you can make extensions and enhancements in small steps.

The core configuration of Ansible resides at /etc/ansible

We are only going to update two files for this exercise.

Update the Ansible Inventory file

Edit /etc/ansible/hosts and add:

[windows]

HOME.NICKTAILOR.COM

“[windows]” is a created group of servers called “windows”. In reality this should be named something more appropriate for a group which would have similar configurations, such as “Active Directory Servers”, or “Production Floor Windows 10 PCs”, etc.

Update the Ansible Group Variables for Windows

Ansible Group Variables are variable settings for a specific inventory group. In this case, we will create the group variables for the “windows” servers created in the /etc/ansible/hosts file.

Create /etc/ansible/group_vars/windows and add:

---

ansible_user: Administrator

ansible_password: Abcd1234

ansible_port: 5986

ansible_connection: winrm

ansible_winrm_server_cert_validation: ignore

This is a YAML configuration file, so make sure the first line is three dashes “---”

Naturally, change the Administrator password to the password for WinServer1.

For best practices, Ansible can encrypt this file into the Ansible Vault. This would prevent the password from being stored here in clear text. For this lab, we are attempting to keep the configuration as simple as possible. Naturally in production this would not be appropriate.

The powershell script must be run on the windows client in order for ansible to be able to talk to the host without issues.

Configure Windows Servers to Manage

To configure the Windows Server for remote management by Ansible requires a bit of work. Luckily the Ansible team has created a PowerShell script for this. Download this script from [here] to each Windows Server to manage and run this script as Administrator.

Log into WinServer1 as Administrator, download ConfigureRemotingForAnsible.ps1 and run this PowerShell script without any parameters. Once this command has been run on WinServer1, return to the Ansible1 Controller host.

Test Connectivity to the Windows Server

If all has gone well, we should be able to perform an Ansible PING test command. This command will simply connect to the remote WinServer1 server and report success or failure.

Type:

ansible windows -m win_ping

This command runs the Ansible module “win_ping” on every server in the “windows” inventory group.

Type: ansible windows -m setup to retrieve a complete configuration of Ansible environmental settings.

Type: ansible windows -m win_command -a ipconfig

If this command is successful, the next steps will be to build Ansible playbooks to manage Windows Servers.

Managing Windows Servers with Playbooks

Let’s create some playbooks and test Ansible for real on Windows systems.

Create a folder on Ansible1 for the playbooks, YAML files, modules, scripts, etc. For these exercises we created a folder under /root called win_playbooks.

Ansible has some expectations on the directory structure where playbooks reside. Create the library and scripts folders for use later in this exercise.

Commands:

cd /root

mkdir win_playbooks

mkdir win_playbooks/library

mkdir win_playbooks/scripts

Create the first playbook example “netstate.yml”

The contents are:

- name: test cmd from win_command module
  hosts: windows
  tasks:
    - name: run netstat and return Ethernet stats
      win_command: netstat -e
      register: netstat
    - debug: var=netstat

This playbook does only one task: it connects to the servers in the Ansible inventory group “windows”, runs the command netstat.exe -e, and returns the results.

To run this playbook, run this command on Ansible1:

Errors that I ran into

Now on ubuntu you might get some SSL error when trying to run a playbook. This is because the python libraries are trying to verify the self signed cert before opening a secure connection via https.

ansible windows -m win_ping

Wintestserver1 | UNREACHABLE! => {
    "changed": false,
    "msg": "ssl: 500 WinRMTransport. [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:590)",
    "unreachable": true
}

You can get around this by updating the python library so it does not look for a valid cert and just opens a secure connection.

Edit /usr/lib/python2.7/sitecustomize.py

--------------------
import ssl

try:
    _create_unverified_https_context = ssl._create_unverified_context
except AttributeError:
    # Legacy Python that doesn't verify HTTPS certificates by default
    pass
else:
    # Handle target environment that doesn't support HTTPS verification
    ssl._create_default_https_context = _create_unverified_https_context
--------------------

Then it should look like this

ansible windows -m win_ping

wintestserver1 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}

Proxies and WSUS:

If you are using a proxy, you need to bypass it for your hosts. Simply export no_proxy with a comma-separated list of hosts and no spaces, adding any other hosts you need to the list:

export no_proxy=127.0.0.1,winserver1

Or add a file in /etc/profile.d/whatever.sh
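If the list of hosts gets long, the value can be built from a plain list instead of typed by hand. A small sketch, assuming hypothetical host names; the echoed line is what you would place in a file under /etc/profile.d/:

```shell
# Hypothetical list of hosts that should bypass the proxy, one per line.
bypass_hosts='127.0.0.1
winserver1
winserver2'

# Join the names with commas and no spaces.
no_proxy_value=$(printf '%s\n' "$bypass_hosts" | paste -sd, -)

# This is the line to put in the profile.d file.
echo "export no_proxy=$no_proxy_value"
```

Keeping the value free of spaces matters, because the shell would otherwise treat everything after the first space as separate arguments to export.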

If you have WSUS configured you will need to check to see if there are updates from there or they will not show when the yaml searches for new updates.

Test windows updates yaml:

---
- hosts: windows
  gather_facts: no
  tasks:
    - name: Search Windows Updates
      win_updates:
        category_names:
          - SecurityUpdates
          - CriticalUpdates
          - UpdateRollups
          - Updates
        state: searched
        log_path: C:\ansible_wu.txt

    - name: Install updates
      win_updates:
        category_names:
          - SecurityUpdates
          - CriticalUpdates
          - UpdateRollups
          - Updates

If it works properly the log file on the test host will have something like the following: C:\ansible_wu.txt

Logs show the update

2018-06-04 08:47:54Z Creating Windows Update session…

2018-06-04 08:47:54Z Create Windows Update searcher…

2018-06-04 08:47:54Z Search criteria: (IsInstalled = 0 AND CategoryIds contains '0FA1201D-4330-4FA8-8AE9-B877473B6441') OR(IsInstalled = 0 AND CategoryIds contains 'E6CF1350-C01B-414D-A61F-263D14D133B4') OR(IsInstalled = 0 AND CategoryIds contains '28BC880E-0592-4CBF-8F95-C79B17911D5F') OR(IsInstalled = 0 AND CategoryIds contains 'CD5FFD1E-E932-4E3A-BF74-18BF0B1BBD83')

2018-06-04 08:47:54Z Searching for updates to install in category Ids 0FA1201D-4330-4FA8-8AE9-B877473B6441 E6CF1350-C01B-414D-A61F-263D14D133B4 28BC880E-0592-4CBF-8F95-C79B17911D5F CD5FFD1E-E932-4E3A-BF74-18BF0B1BBD83…

2018-06-04 08:48:33Z Found 2 updates

2018-06-04 08:48:33Z Creating update collection…

2018-06-04 08:48:33Z Adding update 67a00639-09a1-4c5f-83ff-394e7601fc03 – Security Update for Windows Server 2012 R2 (KB3161949)

2018-06-04 08:48:33Z Adding update ba0f75ff-19c3-4cbd-a3f3-ef5b5c0f88bf – Security Update for Windows Server 2012 R2 (KB3162343)

2018-06-04 08:48:33Z Calculating pre-install reboot requirement…

2018-06-04 08:48:33Z Check mode: exiting…

2018-06-04 08:48:33Z Return value:

{
    "updates": {
        "67a00639-09a1-4c5f-83ff-394e7601fc03": {
            "title": "Security Update for Windows Server 2012 R2 (KB3161949)",
            "id": "67a00639-09a1-4c5f-83ff-394e7601fc03",
            "installed": false,
            "kb": [
                "3161949"
            ]
        },
        "ba0f75ff-19c3-4cbd-a3f3-ef5b5c0f88bf": {
            "title": "Security Update for Windows Server 2012 R2 (KB3162343)",
            "id": "ba0f75ff-19c3-4cbd-a3f3-ef5b5c0f88bf",
            "installed": false,
            "kb": [
                "3162343"
            ]
        }
    },
    "found_update_count": 2,
    "changed": false,
    "reboot_required": false,
    "installed_update_count": 0,
    "filtered_updates": {
    }
}
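If you copy the log back to the ansible server, a couple of one-liners can summarise it. This is a rough sketch that assumes the log keeps the "Found N updates" and "(KB...)" wording shown in the sample above; the three lines below are taken from that sample.

```shell
# A few lines from the sample log; on a real run you would read a copy of C:\ansible_wu.txt.
log='2018-06-04 08:48:33Z Found 2 updates
2018-06-04 08:48:33Z Adding update 67a00639-09a1-4c5f-83ff-394e7601fc03 - Security Update for Windows Server 2012 R2 (KB3161949)
2018-06-04 08:48:33Z Adding update ba0f75ff-19c3-4cbd-a3f3-ef5b5c0f88bf - Security Update for Windows Server 2012 R2 (KB3162343)'

# Number reported on the "Found N updates" line.
found=$(printf '%s\n' "$log" | sed -n 's/.* Found \([0-9][0-9]*\) updates.*/\1/p')

# Unique KB numbers mentioned anywhere in the log, joined with spaces.
kbs=$(printf '%s\n' "$log" | grep -oE 'KB[0-9]+' | sort -u | paste -sd' ' -)

echo "found: $found"
echo "kbs: $kbs"
```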

Written By Nick Tailor

How to setup Ansible on Ubuntu 16.04

Installation

Type the following apt-get command or apt command:

$ sudo apt-get update

$ sudo apt-get upgrade

$ sudo apt-get install software-properties-common

Next add ppa:ansible/ansible to your system’s Software Source:

$ sudo apt-add-repository ppa:ansible/ansible

Ansible is a radically simple IT automation platform that makes your applications and systems easier to deploy. Avoid writing scripts or custom code to deploy and update your applications; automate in a language that approaches plain English, using SSH, with no agents to install on remote systems.

http://ansible.com/
More info: https://launchpad.net/~ansible/+archive/ubuntu/ansible
Press [ENTER] to continue or Ctrl-c to cancel adding it.
gpg: keybox '/tmp/tmp6t9bsfxg/pubring.gpg' created
gpg: /tmp/tmp6t9bsfxg/trustdb.gpg: trustdb created
gpg: key 93C4A3FD7BB9C367: public key "Launchpad PPA for Ansible, Inc." imported
gpg: Total number processed: 1
gpg: imported: 1
OK

Update your repos:

$ sudo apt-get update

Sample outputs:

Ign:1 http://dl.google.com/linux/chrome/deb stable InRelease
Hit:2 http://dl.google.com/linux/chrome/deb stable Release
Get:4 http://in.archive.ubuntu.com/ubuntu artful InRelease [237 kB]
Hit:5 http://security.ubuntu.com/ubuntu artful-security InRelease
Get:6 http://ppa.launchpad.net/ansible/ansible/ubuntu artful InRelease [15.9 kB]
Get:7 http://ppa.launchpad.net/ansible/ansible/ubuntu artful/main amd64 Packages [560 B]
Get:8 http://in.archive.ubuntu.com/ubuntu artful-updates InRelease [65.4 kB]
Hit:9 http://in.archive.ubuntu.com/ubuntu artful-backports InRelease
Get:10 http://ppa.launchpad.net/ansible/ansible/ubuntu artful/main i386 Packages [560 B]
Get:11 http://ppa.launchpad.net/ansible/ansible/ubuntu artful/main Translation-en [340 B]
Fetched 319 kB in 5s (62.3 kB/s)
Reading package lists… Done

To install the latest version of ansible, enter:

$ sudo apt-get install ansible

Finding out Ansible version

Type the following command:

$ ansible --version

Sample outputs:

ansible 2.4.0.0
  config file = /etc/ansible/ansible.cfg
  configured module search path = [u'/home/vivek/.ansible/plugins/modules', u'/usr/share/ansible/plugins/modules']
  ansible python module location = /usr/lib/python2.7/dist-packages/ansible
  executable location = /usr/bin/ansible
  python version = 2.7.14 (default, Sep 23 2017, 22:06:14) [GCC 7.2.0]

Creating your hosts file

Ansible needs to know your remote server names or IP address. This information is stored in a file called hosts. The default is /etc/ansible/hosts. You can edit this one or create a new one in your $HOME directory:

$ sudo vi /etc/ansible/hosts

Or

$ vi $HOME/hosts

Append your server’s DNS or IP address:

[webservers]

server1.nicktailor.com

192.168.0.21

192.168.0.25

[devservers]

192.168.0.22

192.168.0.23

192.168.0.24

I have two groups. The first one is named webservers and the other is called devservers.

Setting up ssh keys

You must configure ssh keys between your machine and remote servers specified in ~/hosts file:

$ ssh-keygen -t rsa -b 4096 -C "My ansible key"

Use scp or ssh-copy-id command to copy your public key file (e.g., $HOME/.ssh/id_rsa.pub) to your account on the remote server/host:

$ ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@server1.nicktailor.com

$ ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@192.168.0.22

$ ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@192.168.0.23

$ ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@192.168.0.24

$ eval $(ssh-agent)

$ ssh-add

Now ansible can talk to all remote servers using ssh command.
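With more than a handful of servers, typing one ssh-copy-id line per host gets tedious. The sketch below pulls the host entries out of an inventory file and prints the commands as a dry run. It assumes a simple INI-style inventory with one host per line, as in the example above, and that root is the remote user; the sample inventory is a shortened copy of that example.

```shell
workdir=$(mktemp -d)

# Sample inventory standing in for ~/hosts.
cat > "$workdir/hosts" <<'EOF'
[webservers]
server1.nicktailor.com
192.168.0.21

[devservers]
192.168.0.22
EOF

# Skip blank lines, comments, and [group] headers; keep the first field of each host line.
inventory_hosts=$(awk 'NF && $1 !~ /^(#|;|\[)/ { print $1 }' "$workdir/hosts" | sort -u)

# Dry run: print the commands instead of running them.
# Remove "echo" to actually copy the key to each host.
for h in $inventory_hosts; do
    echo ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "root@$h"
done
```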

Send a ping to all servers

Just type the following command:

$ ansible -i ~/hosts -m ping all

Sample outputs:

192.168.0.22 | SUCCESS => {
    "changed": false,
    "failed": false,
    "ping": "pong"
}
192.168.0.23 | SUCCESS => {
    "changed": false,
    "failed": false,
    "ping": "pong"
}
192.168.0.24 | SUCCESS => {
    "changed": false,
    "failed": false,
    "ping": "pong"
}

Find out uptime for all hosts

$ ansible -i ~/hosts -m shell -a 'uptime' all

Sample outputs:

do-de.public | SUCCESS | rc=0
10:37:02 up 1 day, 8:39, 1 user, load average: 0.95, 0.27, 0.12

do-blr-vpn | SUCCESS | rc=0
16:07:11 up 1 day, 8:43, 1 user, load average: 0.01, 0.01, 0.00

ln.gfs01 | SUCCESS | rc=0
10:37:17 up 22 days, 5:30, 1 user, load average: 0.18, 0.12, 0.05

Where,

- -i ~/hosts: Specify the inventory host path. You can set a shell variable and skip the -i option, e.g. export ANSIBLE_INVENTORY=~/hosts (older Ansible releases read ANSIBLE_HOSTS instead).

- -m shell: Module name to execute, such as shell, apt, yum and so on.

- -a 'uptime': Module arguments. For example, the shell module accepts Unix/Linux command names. The apt module accepts options to update remote boxes using apt-get/apt, and so on.

- all: Means “all hosts.” You can specify a group name such as devservers (ansible -i ~/hosts -m shell -a 'uptime' devservers) or host names too.

Update all Debian/Ubuntu servers using the apt module

Run the following command:

$ ansible -i ~/hosts -m apt -a 'update_cache=yes upgrade=dist' devservers

Writing your first playbook

You can combine all modules in a text file as follows in yml format i.e. create a file named update.yml:

---

- hosts: devservers

  tasks:

    - name: Updating host using apt

      apt:

        update_cache: yes

        upgrade: dist

Fig.01: Ansible playbook in action

Now you can run it as follows:

$ ansible-playbook -i ~/hosts update.yml

How to set up Ansible to manage Windows hosts with CentOS 7

Note: This assumes you already have an out-of-the-box Ansible setup, a Windows AD domain, and a Windows test VM joined to that domain.

Install Prerequisite Packages on CentOS 7 with Ansible already installed

Use Yum to install the following packages.

Install GCC required for Kerberos

yum -y group install "Development Tools"

Install EPEL

yum -y install epel-release

Install Ansible

yum -y install ansible

Install Kerberos

yum -y install python-devel krb5-devel krb5-libs krb5-workstation

Install Python PIP

yum -y install python-pip

Install BIND utilities for nslookup

yum -y install bind-utils

Bring all packages up to the latest version

yum -y update

Check that Ansible and Python are Installed

Run the commands:

ansible --version | head -n 1

python --version

The versions of Ansible and Python here are 2.4.2 and 2.7.5. Ansible is developing extremely rapidly so these instructions will likely change in the near future.

Configure Kerberos

There are other options than Kerberos, but Kerberos is generally the best option, though not the simplest.

Install the Kerberos wrapper:

pip install "pywinrm[kerberos]"

Kerberos packages were installed previously which will have created /etc/krb5.conf

Edit /etc/krb5.conf

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

default_realm = HOME.NICKTAILOR.COM

dns_lookup_realm = false

dns_lookup_kdc = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

[realms]

HOME.NICKTAILOR.COM = {

kdc = HOME.NICKTAILOR.COM

admin_server = HOME.NICKTAILOR.COM

}

[domain_realm]

.home.nicktailor.com = HOME.NICKTAILOR.COM

home.nicktailor.com = HOME.NICKTAILOR.COM
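Kerberos realm names are case sensitive and by convention upper case, so it can be worth checking the realm before you go any further. This is just a sketch: it runs against a scratch file holding a few lines of the config above rather than the real /etc/krb5.conf, and the variable names are my own.

```shell
# Scratch copy of part of the [libdefaults] section shown above;
# point conf at /etc/krb5.conf to check the real file instead.
conf="$(mktemp)"
cat > "$conf" <<'EOF'
[libdefaults]
default_realm = HOME.NICKTAILOR.COM
dns_lookup_realm = false
dns_lookup_kdc = false
EOF

# Pull out the value after "default_realm =".
realm="$(sed -n 's/^[[:space:]]*default_realm[[:space:]]*=[[:space:]]*//p' "$conf")"

# Upper-case it and compare, so a lower-case realm is caught early.
upper="$(printf '%s' "$realm" | tr '[:lower:]' '[:upper:]')"

if [ -n "$realm" ] && [ "$realm" = "$upper" ]; then
  echo "default_realm OK: $realm"
else
  echo "default_realm missing or not upper case: $realm" >&2
fi

rm -f "$conf"
```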

Test Kerberos

Run the following commands to test Kerberos:

kinit administrator@HOME.NICKTAILOR.COM

Make sure you type the realm exactly as shown, in upper case. It is case sensitive and your authentication will fail otherwise. The user also has to have domain admin privileges.

You will be prompted for the administrator password. Then run:

klist

You should see a Kerberos ticket cache record.

[root@localhost win_playbooks]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: administrator@HOME.NICKTAILOR.COM

Valid starting Expires Service principal

05/23/2018 14:20:50 05/24/2018 00:20:50 krbtgt/HOME.NICKTAILOR.COM@HOME.NICKTAILOR.COM

renew until 05/30/2018 14:20:40

Configure Ansible

Ansible is complex and is sensitive to the environment. Troubleshooting an environment which has never initially worked is complex and confusing. We are going to configure Ansible with the least complex possible configuration. Once you have a working environment, you can make extensions and enhancements in small steps.

The core configuration of Ansible resides at /etc/ansible

We are only going to update two files for this exercise.

Update the Ansible Inventory file

Edit /etc/ansible/hosts and add:

[windows]

HOME.NICKTAILOR.COM

“[windows]” is a created group of servers called “windows”. In reality this should be named something more appropriate for a group which would have similar configurations, such as “Active Directory Servers”, or “Production Floor Windows 10 PCs”, etc.

Update the Ansible Group Variables for Windows

Ansible Group Variables are variable settings for a specific inventory group. In this case, we will create the group variables for the “windows” servers created in the /etc/ansible/hosts file.

Create /etc/ansible/group_vars/windows and add:

---

ansible_user: Administrator

ansible_password: Abcd1234

ansible_port: 5986

ansible_connection: winrm

ansible_winrm_server_cert_validation: ignore

This is a YAML configuration file, so make sure the first line is three dashes “---”.

Naturally change the Administrator password to the password for WinServer1.

For best practices, Ansible can encrypt this file into the Ansible Vault. This would prevent the password from being stored here in clear text. For this lab, we are attempting to keep the configuration as simple as possible. Naturally in production this would not be appropriate.
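Even in a lab, a small precaution is to make the group variables file readable by its owner only. The sketch below does that on a scratch copy (the temp directory stands in for /etc/ansible/group_vars, and the variable names are mine). It is not a substitute for encrypting the file.

```shell
# Temp directory standing in for /etc/ansible/group_vars.
dir="$(mktemp -d)"
vars="$dir/windows"

# Same settings as the group variables file above.
cat > "$vars" <<'EOF'
---
ansible_user: Administrator
ansible_password: Abcd1234
ansible_port: 5986
ansible_connection: winrm
ansible_winrm_server_cert_validation: ignore
EOF

# Owner read/write only, since the file holds a clear-text password.
chmod 600 "$vars"

# Show the resulting permission string.
perms="$(ls -l "$vars" | cut -c1-10)"
echo "$perms"
```

For real protection, `ansible-vault encrypt /etc/ansible/group_vars/windows` encrypts the file in place, and you then pass `--ask-vault-pass` when running ansible or ansible-playbook.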

Configure Windows Servers to Manage

Configuring the Windows Server for remote management by Ansible requires a bit of work. Luckily the Ansible team has created a PowerShell script for this. Download this script from [here] to each Windows Server you want to manage and run it as Administrator.

Log into WinServer1 as Administrator, download ConfigureRemotingForAnsible.ps1 and run this PowerShell script without any parameters.

Once this command has been run on the WinServer1, return to the Ansible1 Controller host.

Test Connectivity to the Windows Server

If all has gone well, we should be able to perform an Ansible PING test command. This command will simply connect to the remote WinServer1 server and report success or failure.

Type:

ansible windows -m win_ping

This command runs the Ansible module “win_ping” on every server in the “windows” inventory group.

Type: ansible windows -m setup to retrieve the full set of facts Ansible gathers about each Windows host.

Type: ansible windows -m win_command -a "ipconfig"

If this command is successful, the next steps will be to build Ansible playbooks to manage Windows Servers.

Managing Windows Servers with Playbooks

Let’s create some playbooks and test Ansible for real on Windows systems.

Create a folder on Ansible1 for the playbooks, YAML files, modules, scripts, etc. For these exercises we created a folder under /root called win_playbooks.

Ansible has some expectations on the directory structure where playbooks reside. Create the library and scripts folders for use later in this exercise.

Commands:

cd /root

mkdir win_playbooks

mkdir win_playbooks/library

mkdir win_playbooks/scripts

Create the first playbook example “netstat_e.yml”

The contents are:

- name: test cmd from win_command module

  hosts: windows

  tasks:

    - name: run netstat and return Ethernet stats

      win_command: netstat -e

      register: netstat

    - debug: var=netstat

This playbook does only one task: it connects to the servers in the Ansible inventory group “windows”, runs the command netstat.exe -e and returns the results.

To run this playbook, run this command on Ansible1:

ansible-playbook netstat_e.yml

How to set up Ansible on CentOS 7

Prerequisites

To follow this tutorial, you will need:

Step 1 — Installing Ansible

To begin exploring Ansible as a means of managing our various servers, we need to install the Ansible software on at least one machine.

To get Ansible for CentOS 7, first ensure that the CentOS 7 EPEL repository is installed:

sudo yum install epel-release

Once the repository is installed, install Ansible with yum:

sudo yum install ansible

We now have all of the software required to administer our servers through Ansible.

Step 2 — Configuring Ansible Hosts

Ansible keeps track of all of the servers that it knows about through a “hosts” file. We need to set up this file first before we can begin to communicate with our other computers.

Open the file with root privileges like this:

sudo vi /etc/ansible/hosts

You will see a file that has a lot of example configurations commented out. Keep these examples in the file to help you learn Ansible’s configuration if you want to implement more complex scenarios in the future.

The hosts file is fairly flexible and can be configured in a few different ways. The syntax we are going to use though looks something like this:

Example hosts file

[group_name]

alias ansible_ssh_host=your_server_ip

The group_name is an organizational tag that lets you refer to any servers listed under it with one word. The alias is just a name to refer to that server.

Imagine you have three servers you want to control with Ansible. Ansible communicates with client computers through SSH, so each server you want to manage should be accessible from the Ansible server by typing:

ssh root@your_server_ip

You should not be prompted for a password. While Ansible certainly has the ability to handle password-based SSH authentication, SSH keys help keep things simple.

We will assume that our servers’ IP addresses are 192.168.0.1, 192.168.0.2, and 192.168.0.3. Let’s set this up so that we can refer to these individually as host1, host2, and host3, or as a group as servers. To configure this, you would add this block to your hosts file:

/etc/ansible/hosts

[servers]

host1 ansible_ssh_host=192.168.0.1

host2 ansible_ssh_host=192.168.0.2

host3 ansible_ssh_host=192.168.0.3

Hosts can be in multiple groups and groups can configure parameters for all of their members. Let’s try this out now.
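To make the alias idea concrete, here is a small sketch that looks up the address behind an alias in a scratch copy of the block above. host_ip is a helper name I invented for this example, and the awk only handles the simple "alias key=value" layout used here.

```shell
# Scratch copy of the [servers] block; the real file is /etc/ansible/hosts.
inv="$(mktemp)"
cat > "$inv" <<'EOF'
[servers]
host1 ansible_ssh_host=192.168.0.1
host2 ansible_ssh_host=192.168.0.2
host3 ansible_ssh_host=192.168.0.3
EOF

# host_ip (hypothetical helper): print the ansible_ssh_host value for one alias.
host_ip() {
  awk -v name="$1" '
    $1 == name {
      for (i = 2; i <= NF; i++)
        if (split($i, kv, "=") == 2 && kv[1] == "ansible_ssh_host")
          print kv[2]
    }
  ' "$inv"
}

host_ip host2
```

Once Ansible is installed you can ask it directly with `ansible-inventory --host host2`, which prints the variables it has resolved for that alias.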

Ansible will, by default, try to connect to remote hosts using your current username. If that user doesn’t exist on the remote system, a connection attempt will result in this error:

Ansible connection error

host1 | UNREACHABLE! => {

    "changed": false,

    "msg": "Failed to connect to the host via ssh.",

    "unreachable": true

}

Let’s specifically tell Ansible that it should connect to servers in the “servers” group with the ansiblenick user. Create a directory in the Ansible configuration structure called group_vars:

sudo mkdir /etc/ansible/group_vars

Within this folder, we can create YAML-formatted files for each group we want to configure:

sudo vi /etc/ansible/group_vars/servers

Add this code to the file:

/etc/ansible/group_vars/servers

---

ansible_ssh_user: ansiblenick

YAML files start with “---”, so make sure you don’t forget that part.

Save and close this file when you are finished. Now Ansible will always use the ansiblenick user for the servers group, regardless of the current user.

If you want to specify configuration details for every server, regardless of group association, you can put those details in a file at /etc/ansible/group_vars/all. Individual hosts can be configured by creating files under a directory at /etc/ansible/host_vars.
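Here is roughly how those three locations sit next to each other. This sketch builds the layout in a temp directory standing in for /etc/ansible, so nothing on your system is touched; the contents of the all and host1 files are placeholders of my own.

```shell
# Temp directory standing in for /etc/ansible.
root="$(mktemp -d)"
mkdir -p "$root/group_vars" "$root/host_vars"

# Per-group settings: applies to every host in [servers].
printf '%s\n' '---' 'ansible_ssh_user: ansiblenick' > "$root/group_vars/servers"

# Settings for every host regardless of group (placeholder content).
printf '%s\n' '---' '# variables for all hosts go here' > "$root/group_vars/all"

# Settings for one host only (placeholder content).
printf '%s\n' '---' '# variables for host1 only go here' > "$root/host_vars/host1"

# List the files, sorted, relative to the stand-in root.
layout="$(cd "$root" && find . -type f | LC_ALL=C sort)"
echo "$layout"
rm -rf "$root"
```

The file name under group_vars has to match the group name in the hosts file, and the file name under host_vars has to match the host alias.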

Step 3 — Using Simple Ansible Commands

Now that we have our hosts set up and enough configuration details to allow us to successfully connect to our hosts, we can try out our very first command.

Ping all of the servers you configured by typing:

ansible -m ping all

Ansible will return output like this:

Output

host1 | SUCCESS => {

    "changed": false,

    "ping": "pong"

}

host3 | SUCCESS => {

    "changed": false,

    "ping": "pong"

}

host2 | SUCCESS => {

    "changed": false,

    "ping": "pong"

}

This is a basic test to make sure that Ansible has a connection to all of its hosts.

The -m ping portion of the command is an instruction to Ansible to use the “ping” module. Modules are basically commands that you can run on your remote hosts. Despite the name, the ping module does not send ICMP packets like the normal ping utility in Linux. Instead it checks that Ansible can log in to the host and find a usable Python there.

The all portion means “all hosts.” You could just as easily specify a group:

ansible -m ping servers

You can also specify an individual host:

ansible -m ping host1

You can specify multiple hosts by separating them with colons:

ansible -m ping host1:host2

The shell module lets us send a terminal command to the remote host and retrieve the results. For instance, to find out the memory usage on our host1 machine, we could use:

ansible -m shell -a 'free -m' host1

As you can see, you pass arguments into a module by using the -a switch. Here’s what the output might look like:

Output

host1 | SUCCESS | rc=0 >>

             total       used       free     shared    buffers     cached

Mem:          3954        227       3726          0         14         93

-/+ buffers/cache:        119       3834

Swap:            0          0          0