Author: admin

How to RDP to VNC and authenticate using AD (openSUSE)

In this post we will set up a VNC server and xrdp, which together let you use the Windows Remote Desktop client to connect to your Linux desktop just as you would to any Windows machine, with centralized authentication against Active Directory.

XRDP is a wonderful Remote Desktop Protocol (RDP) server that lets you RDP to your servers/workstations from any Windows machine, from a Mac running an RDP app, or even from Linux using an RDP client such as Remmina.

Virtual Network Computing (VNC) is a graphical desktop sharing system that uses the Remote Frame Buffer (RFB) protocol to remotely control another computer. It is essentially the Linux counterpart of Windows RDP.

Since there was no xrdp package in the openSUSE repositories, getting it all working required a somewhat dirty install.

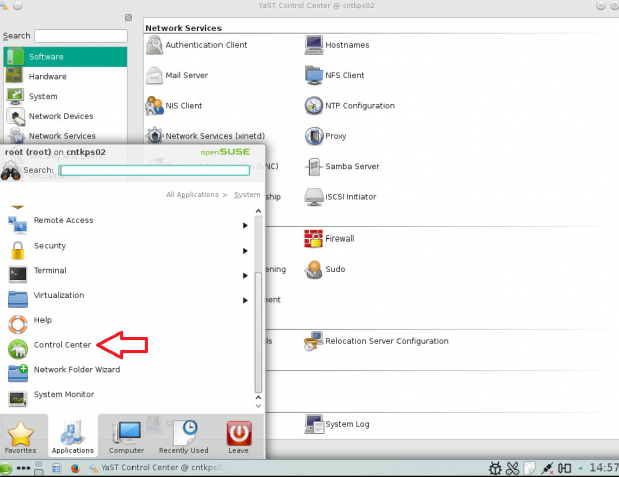

1. First, install the VNC server using YaST:

- yast2 -i tigervnc

- chkconfig vnc on

- vncpasswd <enter>

- Type your VNC password twice

- systemctl start vnc (/usr/bin/vncserver)

The output should look like this:

New ‘X’ desktop is bvanhm01:1

Starting applications specified in /root/.vnc/xstartup

Log file is /root/.vnc/nicktailor.1:1.log
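By convention a VNC server for display `:N` listens on TCP port 5900 + N, so the `:1` desktop above is reachable on port 5901, which is handy when checking firewall rules. A small Python sketch that pulls the display number out of the startup banner; the function name is hypothetical and the banner format is assumed from the sample output above.

```python
import re

VNC_BASE_PORT = 5900  # display :N is served on TCP port 5900 + N


def vnc_port_from_banner(line):
    """Return the TCP port for a "New 'X' desktop is host:N" banner line."""
    match = re.search(r"desktop is\s+\S+?:(\d+)\s*$", line)
    if match is None:
        raise ValueError(f"not a vncserver desktop banner: {line!r}")
    return VNC_BASE_PORT + int(match.group(1))


if __name__ == "__main__":
    print(vnc_port_from_banner("New 'X' desktop is bvanhm01:1"))  # 5901
```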

Next we want to install xrdp. openSUSE doesn't ship a repository or RPM with xrdp already compiled (I'm not 100% sure there isn't one, but I didn't find one when I looked), so we will compile our own xrdp and then configure it to work with VNC. This route worked out, but it is a bit of a dirty setup.

First we need to download the xrdp source (xrdp-v0.6.1.tar.gz):

- Create a directory to store the source files: mkdir /home/xrdp

- cp xrdp-v0.6.1.tar.gz /home/xrdp

- tar -zxvf /home/xrdp/xrdp-v0.6.1.tar.gz -C /home/xrdp

- zypper install git autoconf automake libtool make gcc gcc-c++ libX11-devel libXfixes-devel libXrandr-devel fuse-devel patch flex bison intltool libxslt-tools perl-libxml-perl font-util libxml2-devel openssl-devel pam-devel python-libxml2 xorg-x11

- You will also want to enable remote desktop services in openSUSE

- Now build and install xrdp

- Change to the xrdp source directory (cd /home/xrdp/xrdp-v0.6.1) and run

- ./bootstrap

- ./configure

- make

- Then, as root, run

- make install

2. Once xrdp is installed, add its library directories to the dynamic linker configuration so the system can find them:

- vi /etc/ld.so.conf

- Add the following lines (64-bit and 32-bit library paths):

- /usr/local/lib64/xrdp

- /usr/local/lib/xrdp

- Save the file

- Run ldconfig so the system picks up the new library directories.

- Make sure your /etc/xrdp/xrdp.ini has the following

[globals]

bitmap_cache=yes

bitmap_compression=yes

port=3389

crypt_level=high

channel_code=1

[xrdp1]

name=sesman-Xvnc

lib=libvnc.so

username=ask

password=ask

ip=127.0.0.1

port=-1
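Before restarting xrdp it can help to confirm that the file really contains these values. A minimal Python sketch using the standard-library configparser; the EXPECTED table simply mirrors the example above and the function name is hypothetical.

```python
import configparser

# Values taken from the example xrdp.ini above; adjust to your own setup.
EXPECTED = {
    "globals": {"port": "3389", "crypt_level": "high"},
    "xrdp1": {"lib": "libvnc.so", "ip": "127.0.0.1", "port": "-1"},
}


def check_xrdp_ini(text):
    """Return a list of human-readable problems (empty if none were found)."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    problems = []
    for section, keys in EXPECTED.items():
        if not parser.has_section(section):
            problems.append(f"missing section [{section}]")
            continue
        for key, wanted in keys.items():
            actual = parser.get(section, key, fallback=None)
            if actual != wanted:
                problems.append(f"[{section}] {key}={actual!r}, expected {wanted!r}")
    return problems
```

For example, `check_xrdp_ini(open("/etc/xrdp/xrdp.ini").read())` returns an empty list when everything matches.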

- The xrdp startup script lives in /home/xrdp/xrdp-v0.6.1/instfiles/xrdp.sh

- cd into /etc/init.d/

- Create a symlink to the script there:

- ln -s /home/xrdp/xrdp-v0.6.1/instfiles/xrdp.sh xrdp.sh

- To start xrdp on every reboot, I added the startup script to /etc/rc.d/boot.local

- Add this line:

- /home/xrdp/xrdp-v0.6.1/instfiles/xrdp.sh start


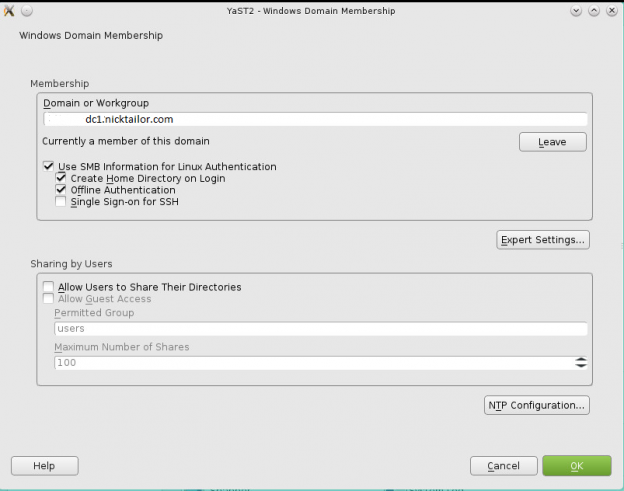

For the next part, make sure your openSUSE machine is already joined to AD and authenticating against it. If not, please refer to my earlier blog post on how to add openSUSE to Active Directory. If you did everything correctly, PAM will be using pam_winbind to authenticate against AD, and the includes below make xrdp use that same authentication process to get to VNC.

- To make xrdp use AD authentication, update /etc/pam.d/xrdp-sesman so it contains:

#%PAM-1.0

auth include common-auth

account include common-account

password include common-password

session include common-session

ISSUES YOU CAN RUN INTO WITH GNOME

- At this point you should, in theory, be able to connect over RDP from a Windows desktop using your AD credentials, provided no firewall is blocking the connection. There is a small catch: if you are using GNOME, it MAY not work. You may connect initially, but as soon as the screen locks, the login screen keeps cycling and you are unable to type your password to get back into your session.

- You might see something like this in your /var/log/messages

2015-08-27T14:15:44.341964-07:00 nicktailor01 gnome-session[10533]: ShellUserVerifier<._userVerifierGot@/usr/share/gnome-shell/js/gdm/util.js:350

2015-08-27T14:15:44.342139-07:00 nicktailor01 gnome-session[10533]: wrapper@/usr/share/gjs-1.0/lang.js:213

2015-08-27T14:15:44.721076-07:00 bvanhm01 gnome-session[10533]: (gnome-shell:10609): Gjs-WARNING **: JS ERROR: Failed to obtain user verifier: Gio.DBusError: GDBus.Error:org.freedesktop.DBus.Error.AccessDenied: No session available

2015-08-27T14:15:44.721381-07:00 nicktailor01 gnome-session[10533]: ShellUserVerifier<._userVerifierGot@/usr/share/gnome-shell/js/gdm/util.js:350

2015-08-27T14:15:44.721553-07:00 nicktailor01 gnome-session[10533]: wrapper@/usr/share/gjs-1.0/lang.js:213

2015-08-27T14:15:45.100944-07:00 nicktailor01 gnome-session[10533]: (gnome-shell:10609): Gjs-WARNING **: JS ERROR: Failed to obtain user verifier: Gio.DBusError: GDBus.Error:org.freedesktop.DBus.Error.AccessDenied: No session available

- The cause appears to be a bug involving systemd and gnome-shell. I reviewed several forum threads about it, but found no solid resolution other than downgrading systemd, and even later updates caused similar issues. Fear not, there is a workaround: switch the desktop from GNOME to a more stable one such as XFCE. Here is how 🙂

- First install XFCE

- zypper install -t pattern xfce

- Next, remove GNOME

- zypper rm $(rpm -qa | grep gnome)
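To check whether a machine is hitting the lock-screen error shown earlier, you can scan /var/log/messages for it. A minimal Python sketch; it assumes each message is logged on a single line, and the regular expression and function name are illustrative, not anything GNOME provides.

```python
import re

# Matches the gnome-shell "No session available" verifier error shown above.
VERIFIER_ERROR = re.compile(
    r"gnome-session\[(\d+)\].*Failed to obtain user\s+verifier.*No session available"
)


def find_verifier_errors(lines):
    """Yield (timestamp, gnome-session PID) for each matching log line."""
    for line in lines:
        match = VERIFIER_ERROR.search(line)
        if match:
            timestamp = line.split(" ", 1)[0]  # first field is the timestamp
            yield timestamp, int(match.group(1))
```

Usage: `with open("/var/log/messages") as fh: hits = list(find_verifier_errors(fh))`; a non-empty result suggests switching desktops as described above.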

Now reboot your machine, and you should be able to connect to your openSUSE machine over RDP with no issues. I realize this is a bit dirty, but it was fun, wasn't it??? 🙂

If you have any questions email nick@nicktailor.com

How to RDP to VNC and authenticate using AD (Redhat 6)

In this post we will set up a VNC server and xrdp, which together let you use the Windows Remote Desktop client to connect to your Linux desktop just as you would to any Windows machine, with centralized authentication against Active Directory.

XRDP is a wonderful Remote Desktop Protocol (RDP) server that lets you RDP to your servers/workstations from any Windows machine, from a Mac running an RDP app, or even from Linux using an RDP client such as Remmina. This was written for the new CentOS 6.5 64-bit, but should work the same on any 6.x or 5.x Red Hat clone with the correct EPEL repository.

Virtual Network Computing (VNC) is a graphical desktop sharing system that uses the Remote Frame Buffer (RFB) protocol to remotely control another computer. It is essentially the Linux counterpart of Windows RDP.

We are going to make them work together so you can RDP from your Windows machine to your Linux desktop as you would to any other Windows machine. xrdp accepts the RDP connection, authenticates you against the Active Directory domain controller, and then connects to the VNC server on the local machine, so you don't need to create users individually for vncserver.

First we need to download and install the EPEL repository for your version. If you do not know which architecture you are using, you can check it with the command below. If the output ends in x86_64 you have a 64-bit install; if it ends in i386 (or i686), it is a 32-bit install:

[root@server ~]# uname -r

2.6.32-431.el6.x86_64

Once you determine your architecture then you can install the correct EPEL repository with the below commands:

32-bit:

wget http://download.fedoraproject.org/pub/epel/6/i386/epel-release-6-8.noarch.rpm

rpm -ivh epel-release-6-8.noarch.rpm

64-bit:

wget http://download.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm

rpm -ivh epel-release-6-8.noarch.rpm
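Picking the right EPEL package from the `uname -r` output can be scripted. A minimal Python sketch, assuming the two epel-release-6-8 URLs above; the function name is hypothetical, and it treats an i686 suffix (common on 32-bit EL6 kernels) the same as i386.

```python
EPEL_URLS = {
    "i386": "http://download.fedoraproject.org/pub/epel/6/i386/epel-release-6-8.noarch.rpm",
    "x86_64": "http://download.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm",
}


def epel_url_for_kernel(release):
    """Pick the EPEL 6 release RPM URL from `uname -r` output."""
    release = release.strip()
    if release.endswith("x86_64"):
        return EPEL_URLS["x86_64"]
    # 32-bit kernels usually report i686, but the EPEL tree is named i386.
    if release.endswith(("i386", "i686")):
        return EPEL_URLS["i386"]
    raise ValueError(f"unrecognised architecture suffix in {release!r}")
```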

You can verify that the EPEL repository is available by running the command below; the epel entry should appear in the repository list:

[root@server ~]# yum repolist

Loaded plugins: fastestmirror, refresh-packagekit, security

Loading mirror speeds from cached hostfile

* base: mirror.thelinuxfix.com

* epel: mirror.cogentco.com

* extras: centos.mirror.nac.net

* updates: centos.mirror.netriplex.com

repo id repo name status

base CentOS-6 - Base 6,367

epel Extra Packages for Enterprise Linux 6 - x86_64 10,220

extras CentOS-6 - Extras 14

updates CentOS-6 - Updates 286

repolist: 16,887

Once you have verified that EPEL is installed correctly, run the last few commands below to install xrdp and the TigerVNC server you will connect to. The front end of xrdp speaks the RDP protocol, and internally it uses VNC to connect to and display the remote desktop.

[root@server ~]# yum install xrdp tigervnc-server

[root@server ~]# service vncserver start

[root@server ~]# service xrdp start

[root@server ~]# chkconfig xrdp on

[root@server ~]# chkconfig vncserver on

- If vncserver did not start, it is probably because of the /etc/sysconfig/vncserver file: you need at least one user (with a VNC password) configured.

- Edit /etc/sysconfig/vncserver

- Add the following, adjusting the user to suit, and save:

VNCSERVERS="2:ntailora"

VNCSERVERARGS[2]="-geometry 800x600 -nolisten tcp -localhost"

- Now su to the user you configured

- su ntailora

- Run vncpasswd

- Type a complex password twice

- Type exit to return to root

- Restart vncserver: /etc/init.d/vncserver restart
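The EL6 vncserver init script reads `VNCSERVERS` as space-separated `display:user` pairs, with per-display options in `VNCSERVERARGS[display]`. If you have several users, a small Python sketch can render both settings consistently; the function name is hypothetical and the options string simply reuses the one from this post.

```python
def vncserver_sysconfig(displays, geometry="800x600"):
    """Render VNCSERVERS / VNCSERVERARGS lines for /etc/sysconfig/vncserver.

    `displays` maps a display number to the local user that owns it.
    """
    pairs = " ".join(f"{num}:{user}" for num, user in sorted(displays.items()))
    lines = [f'VNCSERVERS="{pairs}"']
    for num in sorted(displays):
        # -localhost keeps the VNC port reachable only from this machine (xrdp).
        lines.append(
            f'VNCSERVERARGS[{num}]="-geometry {geometry} -nolisten tcp -localhost"'
        )
    return "\n".join(lines) + "\n"
```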

Now let's make xrdp authenticate RDP logins against AD.

NOTE: YOU MUST HAVE FOLLOWED MY EARLIER BLOG POST "HOW TO ADD A REDHAT SERVER TO ACTIVE DIRECTORY" FOR THIS PART TO WORK.

- We just need to edit the PAM authentication file that was created when xrdp was installed:

- /etc/pam.d/xrdp-sesman (by default it contains the following)

auth include password-auth

account include password-auth

session include password-auth

- Make a backup of /etc/pam.d/xrdp-sesman

- cp /etc/pam.d/xrdp-sesman /etc/pam.d/xrdp-sesman.bak

- Now copy your system-auth file over /etc/pam.d/xrdp-sesman

- cp /etc/pam.d/system-auth /etc/pam.d/xrdp-sesman

It should look something like the file below; the pam_sss.so lines are the SSSD entries that handle Active Directory authentication. You should now be able to use your Active Directory (AD) credentials to authenticate when you RDP to your Linux desktop.

===================================================

#%PAM-1.0

# This file is auto-generated.

# User changes will be destroyed the next time authconfig is run.

auth required pam_env.so

auth sufficient pam_fprintd.so

auth sufficient pam_unix.so nullok try_first_pass

auth requisite pam_succeed_if.so uid >= 500 quiet

auth sufficient pam_sss.so use_first_pass

auth required pam_deny.so

account required pam_unix.so

account sufficient pam_localuser.so

account sufficient pam_succeed_if.so uid < 500 quiet

account [default=bad success=ok user_unknown=ignore] pam_sss.so

account required pam_permit.so

password requisite pam_cracklib.so try_first_pass retry=3

password sufficient pam_unix.so md5 shadow nullok try_first_pass use_authtok

password sufficient pam_sss.so use_authtok

password required pam_deny.so

session optional pam_keyinit.so revoke

session required pam_limits.so

session optional pam_oddjob_mkhomedir.so skel=/etc/skel

session [success=1 default=ignore] pam_succeed_if.so service in crond quiet use_uid

session required pam_unix.so

session optional pam_sss.so

============================================================

Cheers

If you have any questions email nick@nicktailor.com

How to add Redhat Server 6.0 to Active Directory

We will use SSSD, Kerberos and LDAP to join the server to an Active Directory domain for SSO (single sign-on) authentication.

Note: Once you have deployed a server with kickstart, or manually registered a Red Hat server to Satellite, the next step is to join it to the domain controller, aka Active Directory.

The output will look something like this:

Load smb config files from /etc/samba/smb.conf

Loaded services file OK.

Server role: ROLE_DOMAIN_MEMBER

Press enter to see a dump of your service definitions

[global]

workgroup = NICKSTG

realm = NICKSTG.NICKTAILOR.COM

security = ADS

kerberos method = secrets and keytab

log file = /var/log/

client signing = Yes

idmap config * : backend = tdb

Note: If the domain join (net ads join) fails, it is most likely due to one of three reasons.

I ran into the NTP issue. Here is how you fix it.

If your server is not registered to satellite

You will need the following files configured as shown:

/etc/krb5.conf

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

default_realm = NICKSTG.NICKTAILOR.COM

dns_lookup_realm = false

dns_lookup_kdc = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

[realms]

NICKSTG.NICKTAILOR.COM = {

kdc = DC1.NICKTAILOR.COM

admin_server = DC1.NICKTAILOR.COM

}

[domain_realm]

.nickstg.nicktailor.com = NICKSTG.NICKTAILOR.COM

nickstg.nicktailor.com = NICKSTG.NICKTAILOR.COM
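A quick way to catch typos such as a doubled `=` in [domain_realm] is to parse the section before restarting anything. The Python sketch below is a simplified model, not MIT Kerberos's exact lookup code: a name with a leading dot matches any host inside that domain, a bare name matches only that exact host, and the function names are hypothetical.

```python
def parse_domain_realm(section_text):
    """Parse 'name = REALM' lines from a krb5.conf [domain_realm] section.

    Raises ValueError on malformed lines such as 'name = = REALM'.
    """
    mapping = {}
    for raw in section_text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";", "[")):
            continue  # skip blanks, comments and the section header
        name, sep, realm = line.partition("=")
        realm = realm.strip()
        if not sep or not realm or "=" in realm:
            raise ValueError(f"malformed domain_realm line: {raw!r}")
        mapping[name.strip().lower()] = realm
    return mapping


def realm_for_host(host, mapping):
    """Simplified lookup: exact host name first, then '.domain' suffixes."""
    host = host.lower()
    if host in mapping:
        return mapping[host]
    labels = host.split(".")
    for i in range(1, len(labels)):
        suffix = "." + ".".join(labels[i:])  # most specific domain first
        if suffix in mapping:
            return mapping[suffix]
    return None
```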

/etc/oddjobd.conf.d/oddjobd-mkhomedir.conf

<?xml version="1.0"?>

<!-- This configuration file snippet controls the oddjob daemon. It

provides access to mkhomedir functionality via a service named

"com.redhat.oddjob_mkhomedir", which exposes a single object

("/").

The object allows the root user to call any of the standard D-Bus

introspection interface's methods (these are implemented by

oddjobd itself), and also defines an interface named

"com.redhat.oddjob_mkhomedir", which provides two methods. -->

<oddjobconfig>

<service name="com.redhat.oddjob_mkhomedir">

<object name="/">

<interface name="org.freedesktop.DBus.Introspectable">

<allow min_uid="0" max_uid="0"/>

<!-- <method name="Introspect"/> -->

</interface>

<interface name="com.redhat.oddjob_mkhomedir">

<method name="mkmyhomedir">

<helper exec="/usr/libexec/oddjob/mkhomedir -u 0077"

arguments="0"

prepend_user_name="yes"/>

<!-- no acl entries -> not allowed for anyone -->

</method>

<method name="mkhomedirfor">

<helper exec="/usr/libexec/oddjob/mkhomedir -u 0077"

arguments="1"/>

<allow user="root"/>

</method>

</interface>

</object>

</service>

</oddjobconfig>
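Because a mangled comment marker (for example an en dash where `--` belongs) makes the whole file invalid XML, it is worth checking that oddjobd-mkhomedir.conf still parses after copying it from a web page. A Python sketch using the standard library's ElementTree; the function name is hypothetical, and it only reports the methods and helper commands it finds.

```python
import xml.etree.ElementTree as ET


def oddjob_methods(xml_text):
    """Map 'interface.method' to its helper command line.

    ET.fromstring raises ET.ParseError if the XML is malformed.
    """
    root = ET.fromstring(xml_text)
    methods = {}
    for interface in root.iter("interface"):
        for method in interface.findall("method"):
            helper = method.find("helper")
            key = f"{interface.get('name')}.{method.get('name')}"
            methods[key] = helper.get("exec") if helper is not None else None
    return methods
```

Usage: `oddjob_methods(open("/etc/oddjobd.conf.d/oddjobd-mkhomedir.conf").read())` should list `mkmyhomedir` and `mkhomedirfor`.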

================================================================================

/etc/pam.d/password-auth-ac

#%PAM-1.0

# This file is auto-generated.

# User changes will be destroyed the next time authconfig is run.

auth required pam_env.so

auth sufficient pam_unix.so nullok try_first_pass

auth requisite pam_succeed_if.so uid >= 500 quiet

auth sufficient pam_sss.so use_first_pass

auth required pam_deny.so

account required pam_unix.so

account sufficient pam_localuser.so

account sufficient pam_succeed_if.so uid < 500 quiet

account [default=bad success=ok user_unknown=ignore] pam_sss.so

account required pam_permit.so

password requisite pam_cracklib.so try_first_pass retry=3

password sufficient pam_unix.so md5 shadow nullok try_first_pass use_authtok

password sufficient pam_sss.so use_authtok

password required pam_deny.so

session optional pam_keyinit.so revoke

session required pam_limits.so

session optional pam_oddjob_mkhomedir.so skel=/etc/skel

session [success=1 default=ignore] pam_succeed_if.so service in crond quiet use_uid

session required pam_unix.so

session optional pam_sss.so

/etc/pam.d/su

#%PAM-1.0

auth sufficient pam_rootok.so

auth [success=2 default=ignore] pam_succeed_if.so use_uid user ingroup grp_technology_integration_servertech_all

auth [success=1 default=ignore] pam_succeed_if.so use_uid user ingroup wheel

auth required pam_deny.so

auth include system-auth

account sufficient pam_succeed_if.so uid = 0 use_uid quiet

account include system-auth

password include system-auth

session include system-auth

session optional pam_xauth.so

#This line is the last line

/etc/pam.d/system-auth-ac

#%PAM-1.0

# This file is auto-generated.

# User changes will be destroyed the next time authconfig is run.

auth required pam_env.so

auth sufficient pam_fprintd.so

auth sufficient pam_unix.so nullok try_first_pass

auth requisite pam_succeed_if.so uid >= 500 quiet

auth sufficient pam_sss.so use_first_pass

auth required pam_deny.so

account required pam_unix.so

account sufficient pam_localuser.so

account sufficient pam_succeed_if.so uid < 500 quiet

account [default=bad success=ok user_unknown=ignore] pam_sss.so

account required pam_permit.so

password requisite pam_cracklib.so try_first_pass retry=3

password sufficient pam_unix.so md5 shadow nullok try_first_pass use_authtok

password sufficient pam_sss.so use_authtok

password required pam_deny.so

session optional pam_keyinit.so revoke

session required pam_limits.so

session optional pam_oddjob_mkhomedir.so skel=/etc/skel

session [success=1 default=ignore] pam_succeed_if.so service in crond quiet use_uid

session required pam_unix.so

session optional pam_sss.so

/etc/samba/smb.conf

[global]

workgroup = NICKSTG

client signing = yes

client use spnego = yes

kerberos method = secrets and keytab

realm = NICKSTG.NICKTAILOR.COM

security = ads

log file = /var/log/

/etc/sssd/sssd.conf

[sssd]

config_file_version = 2

reconnection_retries = 3

sbus_timeout = 30

services = nss, pam

domains = default, nickstg.nicktailor.com

[nss]

filter_groups = root

filter_users = root,bin,daemon,adm,lp,sync,shutdown,halt,mail,news,uucp,operator,games,gopher,ftp,nobody,vcsa,pcap,ntp,dbus,avahi,rpc,sshd,xfs,rpcuser,nfsnobody,haldaemon,avahi-autoipd,gdm,nscd,oracle, ,deploy,tomcat,jboss,apache,ejabberd,cds,distcache,squid,mailnull,smmsp,backup,bb,clam,obdba,postgres,named,mysql,quova

reconnection_retries = 3

[pam]

reconnection_retries = 3

[domain/nickstg.nicktailor.com]

id_provider = ad

access_provider = simple

cache_credentials = true

#ldap_search_base = OU=NICKSTG-Users,DC=NICKSTG,DC=nicktailor,DC=com

override_homedir = /home/%u

default_shell = /bin/bash

simple_allow_groups = ServerTech_All,Server_Systems_Integration
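Each name in the `domains` list of the [sssd] section is expected to have a matching `[domain/NAME]` section; in the example above `default` has none, which is worth double-checking. A small Python sketch that flags such names; it disables configparser interpolation because values like `/home/%u` would otherwise be misread, and the function name is hypothetical.

```python
import configparser


def undefined_sssd_domains(conf_text):
    """Return domains named in [sssd] 'domains' that lack a [domain/NAME] section."""
    # sssd.conf values such as /home/%u are not configparser interpolation.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    listed = [d.strip() for d in parser.get("sssd", "domains", fallback="").split(",")]
    return [d for d in listed if d and not parser.has_section(f"domain/{d}")]
```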

/etc/sudoers

## /etc/sudoers

## nicktailor sudoers configuration

## Include all configuration from /etc/sudoers.d

## Note: the single # is needed in the line below and is NOT a comment!

#includedir /etc/sudoers.d

##%NICKSTG\\domain\ users ALL = NOPASSWD: ALL

%ServerTech_All ALL = NOPASSWD: ALL

%Server_Systems_Integration ALL = NOPASSWD: ALL
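In sudoers a group is written as `%groupname`, with the `%` attached directly to the name; a space after the `%` is not valid group syntax and visudo should reject it (`visudo -c` checks the whole file). A Python sketch that points at offending lines; the function name is hypothetical.

```python
import re

# A group rule must start with '%' immediately followed by the group name.
SPACED_GROUP = re.compile(r"^%\s+\S")


def spaced_group_rules(sudoers_text):
    """Return 1-based line numbers of '% group ...' rules with a stray space."""
    return [
        number
        for number, line in enumerate(sudoers_text.splitlines(), start=1)
        if SPACED_GROUP.match(line.strip())
    ]
```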

How to deploy servers with KickStart 5.0

- Open vCenter and log in

- Find the folder in which you want to create the new VM

- Right-click the folder and select the option to create a new VM

- Go through and select the VM parameters you require (e.g. CPU, memory, disk space)

NOTE: You should keep the disk at 50 GB and thin-provision the VM.

- Next, edit the VM settings

- Select the CD/DVD option and boot off a Red Hat Enterprise Linux 6.6 install DVD.

- Enable the connect on start and connected check boxes at the top.

- Next, select the network adapter and choose the correct network label (VLAN) so the server can communicate on whichever IP/network you chose.


Note: You will not be able to kickstart if you do not have the proper vlan for your ip.

- Next, log in to Satellite

- Click Kickstart in the left pane and then Profiles

- Select the "Advanced Options" button

- Scroll down to network and edit the line as needed, for example:

- --bootproto=static --ip=10.2.10.13 --netmask=255.255.255.0 --gateway=10.2.10.254 --hostname=server1.nicktailor.com --nameserver=10.20.0.17

Note: You need to do this if you want the server provisioned with ip and hostname post install.
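A typo in the gateway or netmask is an easy way to end up with an unreachable server. A Python sketch that builds the same option string and checks that the gateway sits inside the IP's subnet; the function name is hypothetical, and in a plain kickstart file these options follow the `network` keyword.

```python
import ipaddress


def kickstart_network_options(ip, netmask, gateway, hostname, nameserver):
    """Build static --options for the kickstart network setting.

    Raises ValueError if an address is invalid or the gateway is off-subnet.
    """
    interface = ipaddress.ip_interface(f"{ip}/{netmask}")
    if ipaddress.ip_address(gateway) not in interface.network:
        raise ValueError(f"gateway {gateway} is outside {interface.network}")
    options = {
        "bootproto": "static",
        "ip": ip,
        "netmask": netmask,
        "gateway": gateway,
        "hostname": hostname,
        "nameserver": nameserver,
    }
    return " ".join(f"--{key}={value}" for key, value in options.items())
```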

- Scroll down and click Update for the settings to take effect.

- Next, click System Details and then Partitioning.

- Edit the partitions to the required specification. In most cases you won't need to change this, since it is a standard template, but it is documented here for completeness.

Example of standard partition scheme

part /boot --fstype=ext4 --size=500

part pv.local --size=1000 --grow

volgroup vg_local pv.local

logvol / --fstype ext4 --name=root --vgname=vg_local --size=2048

logvol swap --fstype swap --name=swap --vgname=vg_local --recommended

logvol /tmp --fstype ext4 --name=tmp --vgname=vg_local --size=1024

logvol /usr --fstype ext4 --name=usr --vgname=vg_local --size=4096

logvol /home --fstype ext4 --name=home --vgname=vg_local --size=2048

logvol /var --fstype ext4 --name=var --vgname=vg_local --size=4096 --grow

logvol /var/log --fstype ext4 --name=log --vgname=vg_local --size=2048 --grow

logvol /var/log/audit --fstype ext4 --name=audit --vgname=vg_local --size=1024

logvol /opt --fstype ext4 --name=opt --vgname=vg_local --size=4096 --grow
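As a worked check against the 50 GB disk suggested earlier: the fixed --size values (ignoring swap, whose --recommended size depends on RAM, and counting --grow volumes at their minimum) add up to 500 + 2048 + 1024 + 4096 + 2048 + 4096 + 2048 + 1024 + 4096 = 20,980 MB, roughly 20.5 GB. The Python sketch below does the same sum from the scheme text; the function name is hypothetical.

```python
import re

SIZE = re.compile(r"--size[=\s]+(\d+)")


def fixed_sizes_mb(scheme):
    """Map mount point -> --size (MB) for 'part' and 'logvol' lines.

    Physical-volume parts (pv.*) and entries without --size (e.g. swap with
    --recommended) are skipped; --grow entries count only their minimum size.
    """
    sizes = {}
    for line in scheme.splitlines():
        fields = line.split()
        if len(fields) < 2 or fields[0] not in ("part", "logvol"):
            continue
        mount = fields[1]
        match = SIZE.search(line)
        if mount.startswith("pv.") or match is None:
            continue
        sizes[mount] = int(match.group(1))
    return sizes
```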

- Once you have the desired settings, select "Update Partitions"

4. Next, select Software

5. Add or remove packages as necessary.

Putting a minus sign (-) before a package name removes it from the base install; typing just the package name ensures it is added to the base install.

The packages below are an example:

@ Base

@X Window System

@Desktop

@fonts

python-dmidecode

python-ethtool

rhn-check

rhn-client-tools

rhn-setup

rhncfg-actions

rhncfg-client

yum-rhn-plugin

sssd

6. Select Update Packages once you have chosen your base packages

7. Now boot the VM. Once the CD/image has booted, you will see a boot prompt before the install starts; follow the steps below.

8. At the boot prompt, enter the following command (update the IP settings to match the step above as needed; if you are using DHCP, you only need the ks= URL without the additional parameters):

linux ks=http://satellite.nicktailor.com/ks/cfg/org/5/label/Kickstartname ip=10.0.12.99 netmask=255.255.255.0 gateway=10.0.12.254 nameserver=10.20.0.17

9. Your VM at this point should go through without any user interaction and install and reboot with a functional OS.

Note: Since you kickstarted the server from Satellite, it is automatically registered to the Satellite server, saving you the hassle of doing it after the fact.

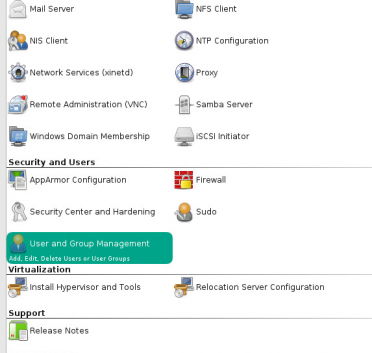

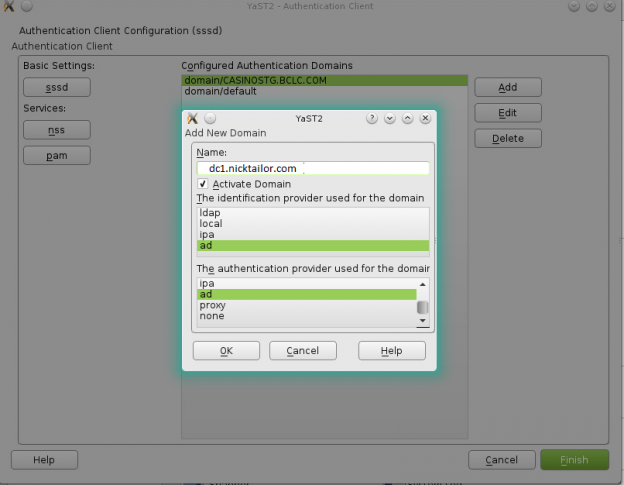

How to join an openSUSE host to Active Directory

nameserver 192.168.0.10

nameserver 192.168.0.11

Note: Before running these steps, make sure you have an administrator account for the domain controller and have properly set up DNS for the desktop/server in Active Directory.

How to do a full volume heal with glusterfs

How to fix a split-brain fully

Use this if your nodes get out of sync and you know which node has the correct data.

For example, if you want node 2 to match node 1, follow these steps:

- gluster volume stop $volumename

- /etc/init.d/glusterfsd stop

- rm -rf /mnt/lv_glusterfs/brick/* (on node 2, the node being overwritten)

- /etc/init.d/glusterfsd start

- gluster volume start $volumename force

- gluster volume heal $volumename full

You should see a successful output, and the "/mnt/lv_glusterfs/brick/" directory on node 2 will start to match node 1.

Finally, you can run:

- gluster volume heal $volumename info split-brain (this will show if there are any splitbrains)

- gluster volume heal $volumename info heal-failed (this will show you files that failed the heal)
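After the full heal, it helps to see at a glance which brick still has pending entries. A Python sketch that parses `gluster volume heal $volumename info`, assuming each brick block starts with `Brick host:/path` followed by `Number of entries: N` (the exact layout can differ between GlusterFS releases); the function name is hypothetical.

```python
import re


def heal_entries_by_brick(output):
    """Map each 'Brick host:/path' to its 'Number of entries: N' count."""
    counts = {}
    brick = None
    for line in output.splitlines():
        line = line.strip()
        if line.startswith("Brick "):
            brick = line[len("Brick "):]  # remember the brick we are inside
            continue
        match = re.match(r"Number of entries:\s*(\d+)", line)
        if match and brick is not None:
            counts[brick] = int(match.group(1))
    return counts
```

A brick whose count stays above zero after the heal is the one to investigate with the split-brain and heal-failed commands above.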

Cheers

How to setup GlusterFS Server/Client

Set up the Gluster server and client on both nodes

On both machines:

- wget http://www.nicktailor.com/files/redhat6/glusterfs-3.4.0-8.el6.x86_64.rpm

- wget http://www.nicktailor.com/files/redhat6/glusterfs-fuse-3.4.0-8.el6.x86_64.rpm

- wget http://www.nicktailor.com/files/redhat6/glusterfs-server-3.4.0-8.el6.x86_64.rpm

- wget http://www.nicktailor.com/files/redhat6/glusterfs-libs-3.4.0-8.el6.x86_64.rpm

- wget http://www.nicktailor.com/files/redhat6/glusterfs-cli-3.4.0-8.el6.x86_64.rpm

Install the GlusterFS server and client:

- yum localinstall -y gluster*.rpm

- yum install fuse

We want to use LVM for the glusterfs, so if we need to increase the size of the volume in future we can do so relatively easily. Repeat these steps on both nodes.

Create your physical volume

- pvcreate /dev/sdb

Create your volume group

- vgcreate vg_gluster /dev/sdb

Create the logical volume

- lvcreate -l100%VG -n lv_gluster vg_gluster

Format your volume

- mkfs.ext3 /dev/vg_gluster/lv_gluster

Make the directory for your glusterfs

- mkdir -p /mnt/lv_gluster

Mount the logical volume to your destination

- mount /dev/vg_gluster/lv_gluster /mnt/lv_gluster

Create your brick

- mkdir -p /mnt/lv_gluster/brick

Add it to your fstab if you want it to mount automatically on reboot:

- echo "" >> /etc/fstab

- echo "/dev/vg_gluster/lv_gluster /mnt/lv_gluster ext3 defaults 0 0" >> /etc/fstab

- service glusterd start

- chkconfig glusterd on

Now from server1.nicktailor.com:

Test to ensure you can contact your second node

- gluster peer probe server2.nicktailor.com

Create the GlusterFS volume with replication between both nodes

- gluster volume create $volumename replica 2 transport tcp server1.nicktailor.com:/mnt/lv_gluster/brick server2.nicktailor.com:/mnt/lv_gluster/brick

Start the glusterfs volume

- gluster volume start $volumename
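GlusterFS groups consecutive bricks into replica sets, so with `replica 2` the brick count has to be a multiple of two. A Python sketch that builds the same `gluster volume create` argument list and checks that rule; the volume name `sftp` in the usage line is an assumption taken from the mount commands below, and the function name is hypothetical.

```python
def volume_create_args(volume, replica, bricks, transport="tcp"):
    """Build the argument list for `gluster volume create ... replica N`."""
    if replica < 2:
        raise ValueError("replica count must be at least 2")
    if not bricks or len(bricks) % replica:
        raise ValueError(f"{len(bricks)} bricks is not a multiple of replica {replica}")
    return ["gluster", "volume", "create", volume,
            "replica", str(replica), "transport", transport, *bricks]
```

Usage on server1: `subprocess.run(volume_create_args("sftp", 2, ["server1.nicktailor.com:/mnt/lv_gluster/brick", "server2.nicktailor.com:/mnt/lv_gluster/brick"]), check=True)`.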

Now on server1.nicktailor.com:

Now we need to create the GlusterFS mount directory that everything will be written to and replicated from.

NOTE: You will not be able to mount the storage unless your glusterfs volume is started

- mkdir /storage

- mount -t glusterfs server1.nicktailor.com:/sftp /storage

Add these lines so the volume mounts automatically on reboot:

- echo "" >> /etc/fstab

- echo "server1.nicktailor.com:/sftp /storage glusterfs defaults,_netdev 0 0" >> /etc/fstab

- echo "" >> /etc/rc.local

- echo "grep -v '^\s*#' /etc/fstab | awk '{if (\$3 == \"glusterfs\") print \$2}' | xargs mount" >> /etc/rc.local

- echo "mount -t glusterfs server1.nicktailor.com:/sftp /storage" >> /etc/rc.local
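The fstab lines echoed in this post are easy to get subtly wrong, since one extra word shifts every field. A Python sketch that sanity-checks a single entry; it also flags a glusterfs entry without `_netdev`, an option commonly added so the mount is treated as a network filesystem and waits for networking. The function name is hypothetical.

```python
def check_fstab_line(line):
    """Return warnings for one fstab entry (an empty list means it looks sane).

    An fstab entry has six whitespace-separated fields:
    device, mount point, filesystem type, options, dump flag, fsck pass.
    """
    fields = line.split()
    if len(fields) != 6:
        return [f"expected 6 fields, found {len(fields)}"]
    _device, mount_point, fstype, options, dump, passno = fields
    warnings = []
    if not mount_point.startswith("/"):
        warnings.append(f"mount point {mount_point!r} is not an absolute path")
    if fstype == "glusterfs" and "_netdev" not in options.split(","):
        warnings.append("glusterfs entry without _netdev")
    if not (dump.isdigit() and passno.isdigit()):
        warnings.append("dump and pass fields must be numbers")
    return warnings
```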

Now, on server2.nicktailor.com, after you have installed GlusterFS, set up the volume group and started the glusterd service, do the following:

- mkdir /storage

- mount -t glusterfs server2.nicktailor.com:/sftp /storage

- echo "" >> /etc/fstab

- echo "server2.nicktailor.com:/sftp /storage glusterfs defaults 0 0" >> /etc/fstab (if this doesn't mount automatically, rely on the mount -t line added to /etc/rc.local below instead)

- echo "" >> /etc/rc.local

- echo "grep -v '^\s*#' /etc/fstab | awk '{if (\$3 == \"glusterfs\") print \$2}' | xargs mount" >> /etc/rc.local

- echo "mount -t glusterfs server2.nicktailor.com:/sftp /storage" >> /etc/rc.local

Cheers, Nick Tailor

If you have questions email nick@nicktailor.com and I will try to answer as soon as I can.

How to do a full restore if you wiped all your LVM’s

I'm sure some of you have had the wonderful experience of losing all your LVM info by mistake. Well, all is not lost and there is hope. I will show ya how to restore it.

The beauty of LVM is that it automatically keeps backups of your volume group metadata (which describes the logical volumes) in the following location:

- /etc/lvm/archive/

If you have just wiped out your LVM and it was using only one physical disk for all your LVs, you can do a full restore as follows:

- vgcfgrestore -f /etc/lvm/archive/(archive file for the volume group) (destination volume group)

o (e.g.) vgcfgrestore -f /etc/lvm/archive/vg_dev1_006.000001.vg vg_dev

If you had multiple disks attached to your volume group then you need to do a couple more things to be able to do a restore.

- Cat the archive file for your volume group (/etc/lvm/archive/<volume group archive>.vg); you should see something like the following:

physical_volumes {

pv0 {

id = "ecFWSM-OH8b-uuBB-NVcN-h97f-su1y-nX7jA9"

device = "/dev/sdj" # Hint only

status = ["ALLOCATABLE"]

flags = []

dev_size = 524288000 # 250 Gigabytes

pe_start = 2048

pe_count = 63999 # 249.996 Gigabytes

}

You will need to recreate every physical volume listed in that .vg file, with its original UUID, before the volume group can be restored:

- pvcreate --restorefile /etc/lvm/archive/vgfilename.vg --uuid <UUID> <DEVICE>

o (e.g.) pvcreate --restorefile /etc/lvm/archive/vg_data_00122-1284284804.vg --uuid ecFWSM-OH8b-uuBB-NVcN-h97f-su1y-nX7jA9 /dev/sdj

- Repeat this step for every physical volume in the archive .vg file until they have all been created.
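With several physical volumes, copying each UUID by hand is error-prone. A Python sketch that reads an archive .vg file and prints one `pvcreate --restorefile` command per `pvN` block, assuming the `id`/`device` layout shown above; it only prints commands (nothing is executed), the device names are LVM's hints from when the archive was written, and the function name is hypothetical.

```python
import re
import shlex

# One "pvN { id = "..." device = "..." ... }" entry in an LVM archive file.
PV_BLOCK = re.compile(
    r'(pv\d+)\s*\{[^{}]*?\bid\s*=\s*"([^"]+)"[^{}]*?\bdevice\s*=\s*"([^"]+)"'
)


def pvcreate_commands(archive_text, archive_path):
    """Return one pvcreate --restorefile command per PV in the archive.

    Confirm each device (e.g. with lsblk) before running anything.
    """
    commands = []
    for _name, uuid, device in PV_BLOCK.findall(archive_text):
        commands.append(
            "pvcreate --restorefile {} --uuid {} {}".format(
                shlex.quote(archive_path), shlex.quote(uuid), shlex.quote(device)
            )
        )
    return commands
```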

Once you have completed the step above, you should be able to restore the volume groups that were wiped:

- vgcfgrestore -f /etc/lvm/archive/(archive file for the volume group) (destination volume group)

o (e.g.) vgcfgrestore -f /etc/lvm/archive/vg_dev1_006.000001.vg vg_dev

- Running vgdisplay and pvdisplay should show that everything is back the way it should be

If you have questions email nick@nicktailor.com

Cheers

How to setup NFS server on Centos 6.x

Setup NFS Server in CentOS / RHEL / Scientific Linux 6.3/6.4/6.5

1. Install NFS in Server

- [root@server ~]# yum install nfs* -y

2. Start NFS service

- [root@server ~]# /etc/init.d/nfs start

Starting NFS services: [ OK ]

Starting NFS mountd: [ OK ]

Stopping RPC idmapd: [ OK ]

Starting RPC idmapd: [ OK ]

Starting NFS daemon: [ OK ]

- [root@server ~]# chkconfig nfs on

3. Install NFS in Client

- [root@vpn client]# yum install nfs* -y

4. Start NFS service in client

- [root@vpn client]# /etc/init.d/nfs start

Starting NFS services: [ OK ]

Starting NFS quotas: [ OK ]

Starting NFS mountd: [ OK ]

Stopping RPC idmapd: [ OK ]

Starting RPC idmapd: [ OK ]

Starting NFS daemon: [ OK ]

- [root@vpn client]# chkconfig nfs on

5. Create shared directories in server

Let's create a shared directory called '/home/nicktailor' on the server and allow client users to read and write files in it.

- [root@server ~]# mkdir /home/nicktailor

- [root@server ~]# chmod 755 /home/nicktailor/

6. Export shared directory on server

Open /etc/exports file and add the entry as shown below

- [root@server ~]# vi /etc/exports

- add the following below

- /home/nicktailor 192.168.1.0/24(rw,sync,no_root_squash,no_all_squash)

where:

/home/nicktailor: the shared directory

192.168.1.0/24: the range of client IP addresses allowed to access the share

rw: makes the share writable

sync: the server replies to requests only after changes have been committed to stable storage

no_root_squash: root on the client keeps root privileges on the share (it can read, write and delete any file in it) instead of being mapped to the anonymous user

no_all_squash: non-root users keep their own UIDs/GIDs instead of being mapped to the anonymous user (this is the default)
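The options in parentheses must be attached directly to the client: per exports(5), writing `192.168.1.0/24 (rw)` with a space gives the subnet the default options and grants rw to every host. A Python sketch that splits an exports entry so such mistakes are easy to spot; a bare `(options)` token is reported with client `*`, and the function name is hypothetical.

```python
def parse_export(line):
    """Split an /etc/exports entry into (path, [(client, [options]), ...]).

    A bare "(options)" token, produced by a space before the parenthesis,
    is reported with client "*" because it applies to all hosts.
    """
    path, *tokens = line.split()
    clients = []
    for token in tokens:
        host, _, opts = token.partition("(")
        options = [opt for opt in opts.rstrip(")").split(",") if opt]
        clients.append((host or "*", options))
    return path, clients
```

After editing /etc/exports, `exportfs -ra` re-exports everything without a full service restart.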

Now restart the NFS service.

- [root@server ~]# /etc/init.d/nfs restart

Shutting down NFS daemon: [ OK ]

Shutting down NFS mountd: [ OK ]

Shutting down NFS services: [ OK ]

Starting NFS services: [ OK ]

Starting NFS mountd: [ OK ]

Stopping RPC idmapd: [ OK ]

Starting RPC idmapd: [ OK ]

Starting NFS daemon: [ OK ]

7. Mount shared directories in client

Create a mount point to mount the shared directories of server.

To do that, create a directory called '/nfs/shared' (you can use any mount point you like)

- [root@vpn client]# mkdir -p /nfs/shared

Now mount the shared directories from server as shown below

- [root@vpn client]# mount -t nfs 192.168.1.200:/home/nicktailor/ /nfs/shared/

For me this took a while and then failed with a connection timed out error. Don't panic: the firewall may be preventing clients from mounting shares from the server. Either stop iptables, or allow the NFS service ports through iptables.

To allow the ports, open /etc/sysconfig/nfs and uncomment the port settings (RQUOTAD_PORT, LOCKD_TCPPORT, LOCKD_UDPPORT, MOUNTD_PORT, STATD_PORT and STATD_OUTGOING_PORT), which appear uncommented in the listing below, so the NFS helper services use fixed ports.

- [root@server ~]# vi /etc/sysconfig/nfs

#

# Define which protocol versions mountd

# will advertise. The values are "no" or "yes"

# with yes being the default

#MOUNTD_NFS_V2="no"

#MOUNTD_NFS_V3="no"

#

#

# Path to remote quota server. See rquotad(8)

#RQUOTAD=”/usr/sbin/rpc.rquotad”

# Port rquotad should listen on.

RQUOTAD_PORT=875

# Optinal options passed to rquotad

#RPCRQUOTADOPTS=""

#

#

# Optional arguments passed to in-kernel lockd

#LOCKDARG=

# TCP port rpc.lockd should listen on.

LOCKD_TCPPORT=32803

# UDP port rpc.lockd should listen on.

LOCKD_UDPPORT=32769

#

#

# Optional arguments passed to rpc.nfsd. See rpc.nfsd(8)

# Turn off v2 and v3 protocol support

#RPCNFSDARGS="-N 2 -N 3"

# Turn off v4 protocol support

#RPCNFSDARGS="-N 4"

# Number of nfs server processes to be started.

# The default is 8.

#RPCNFSDCOUNT=8

# Stop the nfsd module from being pre-loaded

#NFSD_MODULE="noload"

# Set V4 grace period in seconds

#NFSD_V4_GRACE=90

#

#

#

# Optional arguments passed to rpc.mountd. See rpc.mountd(8)

#RPCMOUNTDOPTS=""

# Port rpc.mountd should listen on.

MOUNTD_PORT=892

#

#

# Optional arguments passed to rpc.statd. See rpc.statd(8)

#STATDARG=""

# Port rpc.statd should listen on.

STATD_PORT=662

# Outgoing port statd should used. The default is port

# is random

STATD_OUTGOING_PORT=2020

# Specify callout program

#STATD_HA_CALLOUT="/usr/local/bin/foo"

#

#

# Optional arguments passed to rpc.idmapd. See rpc.idmapd(8)

#RPCIDMAPDARGS=""

#

# Set to turn on Secure NFS mounts.

#SECURE_NFS="yes"

# Optional arguments passed to rpc.gssd. See rpc.gssd(8)

#RPCGSSDARGS=""

# Optional arguments passed to rpc.svcgssd. See rpc.svcgssd(8)

#RPCSVCGSSDARGS=""

#

# To enable RDMA support on the server by setting this to

# the port the server should listen on

#RDMA_PORT=20049
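You can uncomment the port lines with a single sed command instead of editing the file by hand. This sketch assumes GNU sed (for `-i`) and bash. `uncomment_nfs_ports` and the `NFS_CONF` override are hypothetical names. The command removes the leading `#` from the six port settings and leaves every other line unchanged.

```shell
#!/usr/bin/env bash
# Hypothetical helper: uncomment the fixed-port settings in /etc/sysconfig/nfs.
# NFS_CONF is a hypothetical override that defaults to the real file.
NFS_CONF="${NFS_CONF:-/etc/sysconfig/nfs}"

uncomment_nfs_ports() {
    # -E: extended regex. The capture group keeps the variable name and drops
    # only the leading '#', so values such as 892 or 32803 are untouched.
    sed -i -E 's/^#(RQUOTAD_PORT|LOCKD_TCPPORT|LOCKD_UDPPORT|MOUNTD_PORT|STATD_PORT|STATD_OUTGOING_PORT)=/\1=/' "$NFS_CONF"
}

# Usage (as root on the server):
#   uncomment_nfs_ports && /etc/init.d/nfs restart
```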

Now restart the NFS service

- [root@server ~]# /etc/init.d/nfs restart

Shutting down NFS daemon: [ OK ]

Shutting down NFS mountd: [ OK ]

Shutting down NFS services: [ OK ]

Starting NFS services: [ OK ]

Starting NFS mountd: [ OK ]

Stopping RPC idmapd: [ OK ]

Starting RPC idmapd: [ OK ]

Starting NFS daemon: [ OK ]

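Next, add ACCEPT rules for the NFS ports (2049, 111, 32769, 32803, 892, 875 and 662) to the ‘/etc/sysconfig/iptables’ file. The rules must come before the final REJECT rules, because iptables stops at the first rule that matches a packet.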

- [root@server ~]# vi /etc/sysconfig/iptables

# Firewall configuration written by system-config-firewall

# Manual customization of this file is not recommended.

*filter

:INPUT ACCEPT [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

-A INPUT -m state --state ESTABLISHED,RELATED -j ACCEPT

-A INPUT -p icmp -j ACCEPT

-A INPUT -i lo -j ACCEPT

-A INPUT -m state --state NEW -m tcp -p tcp --dport 22 -j ACCEPT

-A INPUT -m state --state NEW -m udp -p udp --dport 2049 -j ACCEPT

-A INPUT -m state --state NEW -m tcp -p tcp --dport 2049 -j ACCEPT

-A INPUT -m state --state NEW -m udp -p udp --dport 111 -j ACCEPT

-A INPUT -m state --state NEW -m tcp -p tcp --dport 111 -j ACCEPT

-A INPUT -m state --state NEW -m udp -p udp --dport 32769 -j ACCEPT

-A INPUT -m state --state NEW -m tcp -p tcp --dport 32803 -j ACCEPT

-A INPUT -m state --state NEW -m udp -p udp --dport 892 -j ACCEPT

-A INPUT -m state --state NEW -m tcp -p tcp --dport 892 -j ACCEPT

-A INPUT -m state --state NEW -m udp -p udp --dport 875 -j ACCEPT

-A INPUT -m state --state NEW -m tcp -p tcp --dport 875 -j ACCEPT

-A INPUT -m state --state NEW -m udp -p udp --dport 662 -j ACCEPT

-A INPUT -m state --state NEW -m tcp -p tcp --dport 662 -j ACCEPT

-A INPUT -j REJECT --reject-with icmp-host-prohibited

-A FORWARD -j REJECT --reject-with icmp-host-prohibited

COMMIT
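The twelve NFS rules differ only in protocol and port, so a script can generate them instead of you typing each one. This bash sketch assumes the fixed ports set in /etc/sysconfig/nfs earlier. `nfs_rules` and `NFS_PORTS` are hypothetical names. The output uses the same iptables-save syntax as the file, with plain double hyphens in `--state` and `--dport`.

```shell
#!/usr/bin/env bash
# Hypothetical list of "protocol:port" pairs matching /etc/sysconfig/nfs
# (111 is portmap/rpcbind, 2049 is nfsd itself).
NFS_PORTS="udp:2049 tcp:2049 udp:111 tcp:111 udp:32769 tcp:32803 udp:892 tcp:892 udp:875 tcp:875 udp:662 tcp:662"

# Hypothetical helper: print one iptables-save style ACCEPT rule per pair.
nfs_rules() {
    local entry proto port
    for entry in $NFS_PORTS; do
        proto="${entry%%:*}"   # text before the colon
        port="${entry##*:}"    # text after the colon
        printf '%s\n' "-A INPUT -m state --state NEW -m $proto -p $proto --dport $port -j ACCEPT"
    done
}

# Usage: nfs_rules
# Paste the output above the "-A INPUT -j REJECT" line, then restart iptables.
```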

Now restart the iptables service

- [root@server ~]# service iptables restart

iptables: Flushing firewall rules: [ OK ]

iptables: Setting chains to policy ACCEPT: filter [ OK ]

iptables: Unloading modules: [ OK ]

iptables: Applying firewall rules: [ OK ]

Mount the share from the client again:

- [root@vpn client]# mount -t nfs 192.168.1.200:/home/nicktailor/ /nfs/shared/

This time the NFS share mounts without a connection timed out error.

To verify that the shared directory is mounted, run the mount command on the client system.

- [root@vpn client]# mount

/dev/mapper/vg_vpn-lv_root on / type ext4 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

tmpfs on /dev/shm type tmpfs (rw,rootcontext="system_u:object_r:tmpfs_t:s0")

/dev/sda1 on /boot type ext4 (rw)

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

nfsd on /proc/fs/nfsd type nfsd (rw)

192.168.1.200:/home/nicktailor/ on /nfs/shared type nfs (rw,vers=4,addr=192.168.1.200,clientaddr=192.168.1.29)
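Reading the full `mount` listing by eye works, but a script can run the same check. This minimal sketch assumes bash, awk, and the `SOURCE on MOUNTPOINT type FSTYPE (OPTIONS)` line layout shown above. `is_nfs_mounted` is a hypothetical helper name. It succeeds only if the given mount point appears with type `nfs` or `nfs4`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `mount` output on stdin and exit 0 only if the
# given mount point is listed with an NFS filesystem type.
is_nfs_mounted() {
    local mp="$1"
    # Field 3 is the mount point, field 5 the type. The anchored regex avoids
    # matching unrelated types such as "nfsd".
    awk -v mp="$mp" '$3 == mp && $5 ~ /^nfs4?$/ { found = 1 } END { exit !found }'
}

# Usage on the client:
#   mount | is_nfs_mounted /nfs/shared && echo "/nfs/shared is mounted over NFS"
```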

8. Testing NFS

Now create some files or folders in the ‘/nfs/shared’ directory which we mounted in the previous step.

- [root@vpn shared]# mkdir test

- [root@vpn shared]# touch file1 file2 file3

Now go to the server and change to the ‘/home/nicktailor’ directory.

- [root@server ~]# cd /home/nicktailor/

- [root@server nicktailor]# ls

file1 file2 file3 test

- [root@server nicktailor]#

The files and directories created on the client are now listed on the server. Sharing works in both directions: files created in ‘/home/nicktailor’ on the server also show up in ‘/nfs/shared’ on the client.

9. Automount the Shares

If you want the share to be mounted automatically at boot instead of mounting it manually after every reboot, add the NFS line (the last line below) to the ‘/etc/fstab’ file on the client system.

- [root@vpn client]# vi /etc/fstab

#

# /etc/fstab

# Created by anaconda on Wed Feb 27 15:35:14 2013

#

# Accessible filesystems, by reference, are maintained under ‘/dev/disk’

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/vg_vpn-lv_root / ext4 defaults 1 1

UUID=59411b1a-d116-4e52-9382-51ff6e252cfb /boot ext4 defaults 1 2

/dev/mapper/vg_vpn-lv_swap swap swap defaults 0 0

tmpfs /dev/shm tmpfs defaults 0 0

devpts /dev/pts devpts gid=5,mode=620 0 0

sysfs /sys sysfs defaults 0 0

proc /proc proc defaults 0 0

192.168.1.200:/home/nicktailor /nfs/shared nfs rw,sync,hard,intr 0 0
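An fstab line needs six whitespace-separated fields: device, mount point, type, options, dump and pass. If the spaces between them are lost, the line cannot be parsed. This bash sketch builds the line from its parts. `nfs_fstab_line` is a hypothetical helper name, and its default options simply mirror the ones used here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build an NFS /etc/fstab line with all six fields
# (device, mount point, type, options, dump, pass) separated by spaces.
nfs_fstab_line() {
    local server="$1" export_path="$2" mount_point="$3" opts="${4:-rw,sync,hard,intr}"
    printf '%s:%s %s nfs %s 0 0\n' "$server" "$export_path" "$mount_point" "$opts"
}

# Usage (as root on the client), appending only if the line is not already there:
#   line="$(nfs_fstab_line 192.168.1.200 /home/nicktailor /nfs/shared)"
#   grep -qxF -- "$line" /etc/fstab || printf '%s\n' "$line" >> /etc/fstab
#   mount -a   # mounts fstab entries not yet mounted, so typos surface before a reboot
```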

10. Verify the Shares

Reboot your client system and verify whether the share is mounted automatically or not.

- [root@vpn client]# mount

/dev/mapper/vg_vpn-lv_root on / type ext4 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

tmpfs on /dev/shm type tmpfs (rw,rootcontext="system_u:object_r:tmpfs_t:s0")

/dev/sda1 on /boot type ext4 (rw)

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

192.168.1.200:/home/nicktailor on /nfs/shared type nfs (rw,sync,hard,intr,vers=4,addr=192.168.1.200,clientaddr=192.168.1.29)

nfsd on /proc/fs/nfsd type nfsd (rw)

How to set up an NFS server on Debian

DEBIAN SETUP

Make sure your server’s kernel has NFS server support. The kernel module is named “nfsd.ko” and lives under your /lib/modules/`uname -r`/ directory structure; the server can also be built directly into the kernel. To check, run:

$ grep NFSD /boot/config-`uname -r`

or similar, depending on where you keep your kernel config file (for example, /usr/src/linux/.config).
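The grep above prints every NFSD-related option. This bash sketch answers the specific question instead, assuming a kernel config file in the usual `CONFIG_NFSD=y` / `CONFIG_NFSD=m` format. `nfsd_support` is a hypothetical helper name. A value of `y` means the server is built into the kernel, and `m` means it is a loadable module.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether a kernel config enables the NFS server.
# Defaults to the running kernel's config in /boot.
nfsd_support() {
    local config="${1:-/boot/config-$(uname -r)}"
    # Anchored match so CONFIG_NFSD_V3 and similar options are ignored.
    case "$(grep -E '^CONFIG_NFSD=' "$config" 2>/dev/null)" in
        CONFIG_NFSD=y) echo "built-in" ;;
        CONFIG_NFSD=m) echo "module" ;;
        *)             echo "missing"; return 1 ;;
    esac
}

# Usage: nfsd_support                        # running kernel
#        nfsd_support /usr/src/linux/.config # a config file elsewhere
```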

There are at least two mainstream NFS server implementations in use (excluding those implemented in Python and similar). One runs in user space and is slower but easier to debug. The other runs in kernel space and is faster. The steps below set up the kernel-space server. If you want the user-space server instead, install the similarly named package.

First, install the packages:

- $ aptitude install nfs-kernel-server portmap

Note that portmap by default listens only on 127.0.0.1 (localhost). To allow connections from your local network, edit /etc/default/portmap and comment out the “OPTIONS” line. You also need to make sure that the /etc/hosts.allow file allows connections to the portmap port. For example:

2. Now run the following commands. They comment out the OPTIONS line in the portmap configuration file, allow the subnet your NFS server is on to reach portmap through hosts.allow, restart portmap, and add an rpcbind entry to hosts.allow.

- $ perl -pi -e 's/^OPTIONS/#OPTIONS/' /etc/default/portmap

- $ echo "portmap: 192.168.1." >> /etc/hosts.allow

- $ /etc/init.d/portmap restart

- $ echo "rpcbind: ALL" >> /etc/hosts.allow

See ‘man hosts.allow’ for examples of the syntax. In general, an IP address fragment that ends with a period, as here, acts as a prefix wildcard. In this example it allows every address from 192.168.1.0 to 192.168.1.255. You can also use DNS names and other wildcard patterns.
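The trailing-period rule fits in a few lines of shell. This bash sketch is only an illustration. `prefix_matches` is a hypothetical helper that models this one rule. It is not the full hosts_access(5) matching logic, which also handles host names, netmasks and keywords such as ALL.

```shell
#!/usr/bin/env bash
# Hypothetical helper modelling one hosts.allow rule: a pattern ending in "."
# matches any address that starts with it; any other pattern must match exactly.
prefix_matches() {
    local pattern="$1" addr="$2"
    case "$pattern" in
        *.) case "$addr" in "$pattern"*) return 0 ;; esac ;;
        *)  [ "$addr" = "$pattern" ] && return 0 ;;
    esac
    return 1
}

# prefix_matches 192.168.1. 192.168.1.42   -> match (exit status 0)
# prefix_matches 192.168.1. 192.168.10.5   -> no match: the trailing "." keeps
#                                              192.168.1 from also covering 192.168.10.x
```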

- Then edit the /etc/exports file, which lists the server’s filesystems to export over NFS to client machines. The following example adds the path “/example” for access by any machine on the local network (here 192.168.1.*).

- $ echo "/example 192.168.1.0/255.255.255.0(rw,no_root_squash,subtree_check)" >> /etc/exports

- $ /etc/init.d/nfs-kernel-server reload

This tells the server to export that path read/write. The no_root_squash option lets clients connecting as root keep root access instead of being mapped to ‘nobody’. Setting ‘subtree_check’ explicitly silences a warning about the default. The second command then reloads the server.
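The Debian example writes the client range as address/netmask, while the CentOS example earlier uses 192.168.1.0/24. The exports file accepts both forms. This bash sketch converts a netmask to a prefix length by counting set bits, assuming a valid contiguous netmask. `netmask_to_prefix` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted netmask such as 255.255.255.0 to a
# CIDR prefix length. Assumes a valid, contiguous mask.
netmask_to_prefix() {
    local IFS=. octet bits=0
    for octet in $1; do          # IFS="." splits the mask into its four octets
        while [ "$octet" -gt 0 ]; do
            bits=$(( bits + (octet & 1) ))   # count the lowest set bit
            octet=$(( octet >> 1 ))          # shift to the next bit
        done
    done
    echo "$bits"
}
```

For example, `netmask_to_prefix 255.255.255.0` prints 24. So 192.168.1.0/255.255.255.0 and 192.168.1.0/24 describe the same clients.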

6. On the client machine that should mount the NFS share, type the following (create /mnt/example first if it does not exist):

- $ mount 192.168.1.100:/example /mnt/example

To check the result, type:

- $ mount

The output should look similar to this:

/dev/sda3 on / type ext4 (rw,errors=remount-ro)

tmpfs on /lib/init/rw type tmpfs (rw,nosuid,mode=0755)

proc on /proc type proc (rw,noexec,nosuid,nodev)

sysfs on /sys type sysfs (rw,noexec,nosuid,nodev)

udev on /dev type tmpfs (rw,mode=0755)

tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev)

devpts on /dev/pts type devpts (rw,noexec,nosuid,gid=5,mode=620)

/dev/sda1 on /tmp type ext4 (rw)

rpc_pipefs on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

nfsd on /proc/fs/nfsd type nfsd (rw)

192.168.1.100:/nicktest on /mnt/nfs type nfs (rw,nolock,addr=192.168.1.100)